Review: DataRobot aces automated machine learning

Data science is nothing if not tedious in ordinary practice. The initial tedium consists of finding data relevant to the problem you are trying to model, cleaning it, and finding or constructing a good set of features. The next tedium is a matter of attempting to train every possible machine learning and deep learning model to your data, and picking the best few to tune.

Then you need to understand the models well enough to explain them. This is especially important when the model will be helping to make life-altering decisions, and when decisions may be reviewed by regulators. Finally, you need to deploy the best model (usually the one with the best accuracy and acceptable prediction time), monitor it in production, and improve (retrain) the model as the data drifts over time.

AutoML, i.e. automated machine learning, can speed up these processes dramatically, sometimes from months to hours, and can also lower the human requirements from experienced Ph.D. data scientists to less-skilled data scientists and even business analysts. DataRobot was one of the earliest vendors of AutoML solutions, although they often call it Enterprise AI and typically bundle the software with consulting from a trained data scientist. DataRobot didn't cover the whole machine learning lifecycle initially, but over the years they have acquired other companies and integrated their products to fill in the gaps.

As shown in the listing below, DataRobot has divided the AutoML process into 10 steps. While DataRobot claims to be the only vendor to cover all 10 steps, other vendors might beg to differ, or offer their own services plus one or more third-party services as a "best of breed" system. Competitors to DataRobot include (in alphabetical order) AWS, Google (plus Trifacta for data preparation), H2O.ai, IBM, MathWorks, Microsoft, and SAS.

The 10 steps of automated machine learning, according to DataRobot:

- Data identification

- Data preparation

- Feature engineering

- Algorithm diversity

- Algorithm selection

- Training and tuning

- Head-to-head model competitions

- Human-friendly insights

- Easy deployment

- Model monitoring and management

DataRobot platform overview

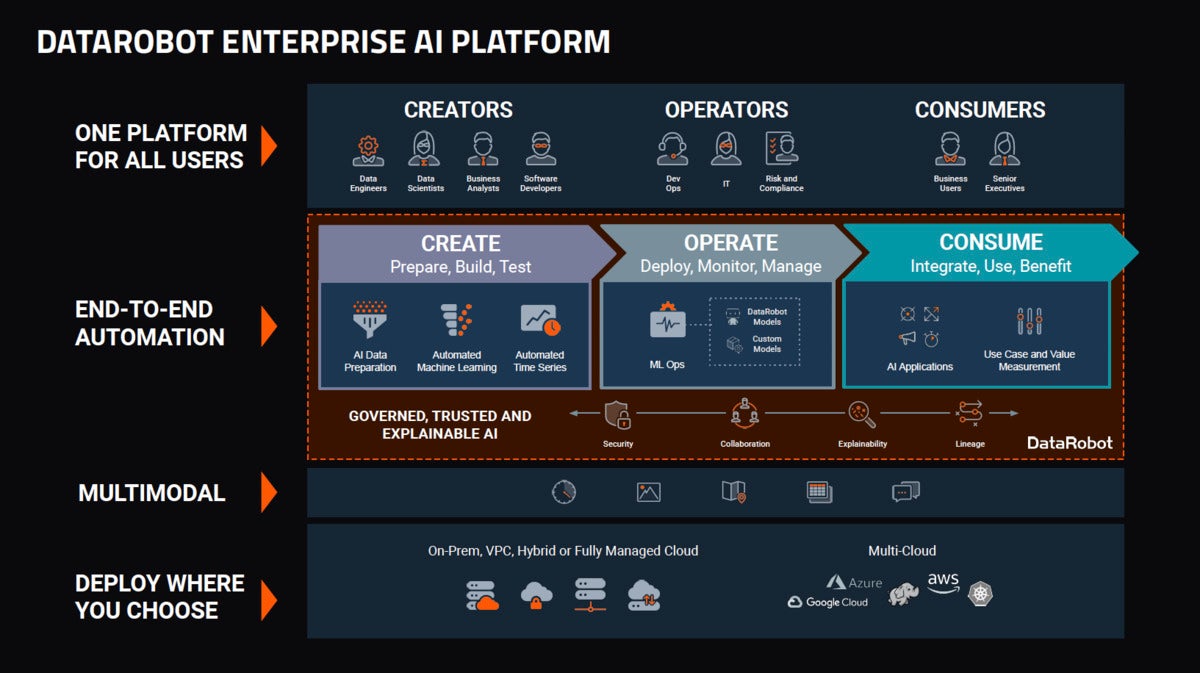

As you can see in the slide below, the DataRobot platform tries to address the needs of a wide variety of personas, automate the whole machine learning lifecycle, deal with the issues of model explainability and governance, handle all kinds of data, and deploy pretty much anywhere. It mostly succeeds.

DataRobot helps data engineers with its AI Catalog and Paxata data prep. It helps data scientists primarily with its AutoML and automated time series, but also with its more advanced options for models and its Trustworthy AI. It helps business analysts with its easy-to-use interface. And it helps software developers with its ability to integrate machine learning models with production systems. DevOps and IT benefit from DataRobot MLOps (acquired in 2019 from ParallelM), and risk and compliance officers can benefit from its Trustworthy AI. Business users and executives benefit from better and faster model building and from data-driven decision making.

End-to-end automation speeds up the whole machine learning process and also tends to produce better models. By quickly training many models in parallel and using a large library of models, DataRobot can sometimes find a much better model than skilled data scientists training one model at a time.

In the row marked multimodal in the diagram below, there are five icons. At first they puzzled me, so I asked what they mean. Essentially, DataRobot has models that can handle time series, images, geographic information, tabular data, and text. The surprising bit is that it can combine all of those data types in a single model.

DataRobot gives you a choice of deployment locations. It will run on a Linux server or Linux cluster on-premises, in a cloud VPC, in a hybrid cloud, or in a fully managed cloud. It supports Amazon Web Services, Microsoft Azure, or Google Cloud Platform, as well as Hadoop and Kubernetes.

DataRobot

DataRobot platform diagram. Many of the features were added to the platform through acquisitions, including data preparation and MLOps.

Paxata data prep

DataRobot acquired self-service data preparation company Paxata in December 2019. Paxata is now integrated with DataRobot's AI Catalog and feels like part of the DataRobot product, although you can still buy it as a standalone product if you wish.

Paxata has three functions. First, it allows you to import datasets. Second, it lets you explore, clean, combine, and condition the data. And third, it allows you to publish prepared data as an AnswerSet. Each step you perform in Paxata creates a version, so that you can always continue to work on the data.

Data cleaning in Paxata includes standardizing values, removing duplicates, finding and fixing errors, and more. You can shape your data using tools such as pivot, transpose, group by, and more.
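Paxata does this work through its GUI tools, so there is no Paxata code to show. As a rough illustration of what "standardize values, remove duplicates, group by" mean on records, here is a plain-Python sketch on a toy list of listings; the field names and the helpers `standardize`, `clean_rows`, and `group_by_mean` are hypothetical and are not Paxata APIs.

```python
from collections import defaultdict
from typing import Dict, List


def standardize(value: str) -> str:
    """Collapse whitespace and normalize case so 'cleveland ' and 'Cleveland' match."""
    return " ".join(value.split()).title()


def clean_rows(rows: List[Dict]) -> List[Dict]:
    """Standardize the hypothetical 'city' field, then drop exact duplicates (order kept)."""
    seen = set()
    cleaned = []
    for row in rows:
        fixed = {**row, "city": standardize(row["city"])}
        key = tuple(sorted(fixed.items()))
        if key not in seen:
            seen.add(key)
            cleaned.append(fixed)
    return cleaned


def group_by_mean(rows: List[Dict], key: str, field: str) -> Dict[str, float]:
    """A minimal 'group by' that averages one numeric field per group."""
    totals = defaultdict(lambda: [0.0, 0])
    for row in rows:
        acc = totals[row[key]]
        acc[0] += row[field]
        acc[1] += 1
    return {k: s / n for k, (s, n) in totals.items()}


rows = [
    {"city": "cleveland ", "price": 200000},
    {"city": "Cleveland", "price": 200000},  # a duplicate once standardized
    {"city": "akron", "price": 150000},
]
cleaned = clean_rows(rows)
print(len(cleaned))                              # 2
print(group_by_mean(cleaned, "city", "price"))  # {'Cleveland': 200000.0, 'Akron': 150000.0}
```

Note that deduplication runs after standardization; done the other way around, the two Cleveland rows would not be recognized as duplicates.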

The screenshot below shows a real estate dataset that has a dozen Paxata processing steps. It starts with a house price tabular dataset; then it adds exterior and interior images, removes unneeded columns and bad rows, and adds ZIP code geospatial information. This screenshot is from the Residence Listings demo.

IDG

Paxata allows the user to build AnswerSets from datasets one step at a time. The Paxata tools all have a GUI, although the Compute tool lets the user enter simple formulas or build advanced formulas using columns and functions.

DataRobot automated machine learning

Essentially, DataRobot AutoML works by going through a couple of exploratory data analysis (EDA) phases, identifying informative features, engineering new features (especially from date types), then trying many models with small amounts of data.

EDA phase 1 runs on up to 500MB of your dataset and provides summary statistics, as well as checking for outliers, inliers, excess zeroes, and disguised missing values. When you select a target and hit run, DataRobot "searches through millions of possible combinations of algorithms, preprocessing steps, features, transformations, and tuning parameters. It then uses supervised learning algorithms to analyze the data and identify (apparent) predictive relationships."
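The review doesn't say which heuristics DataRobot uses for these checks. The sketch below shows generic stand-ins for three of them on a single numeric column: Tukey's 1.5 × IQR fences for outliers, the share of zeroes, and a lookup against a hypothetical set of sentinel codes (`SENTINELS`) that often disguise missing values. Inlier detection is not covered, and none of this should be read as DataRobot's actual logic.

```python
import statistics
from typing import Dict, List

# Hypothetical placeholder codes that sometimes stand in for "missing".
SENTINELS = {-999, -1, 9999}


def eda_report(values: List[float]) -> Dict[str, object]:
    """Summary statistics plus simple data-quality flags for one numeric column."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartiles (Python 3.8+)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr  # Tukey's fences
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "outliers": [v for v in values if v < low or v > high],
        "zero_fraction": sum(v == 0 for v in values) / len(values),
        "disguised_missing": [v for v in values if v in SENTINELS],
    }


lot_size = [3, 4, 4, 5, 5, 5, 6, 6, 7, 0, 0, -999, 120]  # hypothetical column
report = eda_report(lot_size)
print(report["outliers"])           # [-999, 120]
print(report["disguised_missing"])  # [-999]
```

A sentinel like -999 usually also shows up as an outlier. Flagging it separately matters because the right fix is to treat it as missing, not to clip it.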

DataRobot autopilot mode starts with 16% of the data for all appropriate models, 32% of the data for the top 16 models, and 64% of the data for the top 8 models. All results are displayed on the leaderboard. Quick mode runs a subset of models on 32% and 64% of the data. Manual mode gives you full control over which models to execute, including specific models from the repository.
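The staged autopilot schedule resembles successive halving: train many models cheaply, then spend more data only on the leaders. Here is a minimal sketch of that schedule using the 16%/32%/64% fractions and the top-16/top-8 cutoffs quoted above. The `score(model, fraction)` callback is a hypothetical stand-in for "train this model on that fraction of the data and return a validation score." It is not a DataRobot API, and the real system's partitioning and parallelism are not modeled.

```python
from typing import Callable, Dict, List, Optional, Tuple

# (fraction of training data, number of top models promoted into this stage);
# None means every appropriate model runs in the stage.
STAGES: List[Tuple[float, Optional[int]]] = [(0.16, None), (0.32, 16), (0.64, 8)]


def autopilot(models: List[str], score: Callable[[str, float], float]) -> Dict[str, float]:
    """Staged model search; returns a leaderboard of each model's latest score.

    `score(model, fraction)` is a hypothetical stand-in for training `model` on
    `fraction` of the data and returning its validation score (higher is better).
    """
    leaderboard: Dict[str, float] = {}
    survivors = list(models)
    for fraction, keep in STAGES:
        if keep is not None:
            # Promote only the best `keep` models from the previous stage.
            survivors = sorted(survivors, key=lambda m: leaderboard[m], reverse=True)[:keep]
        for model in survivors:
            leaderboard[model] = score(model, fraction)
    return leaderboard
```

Ranking by the previous stage's score is what lets the cheap 16% runs prune the field before the expensive 64% runs.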

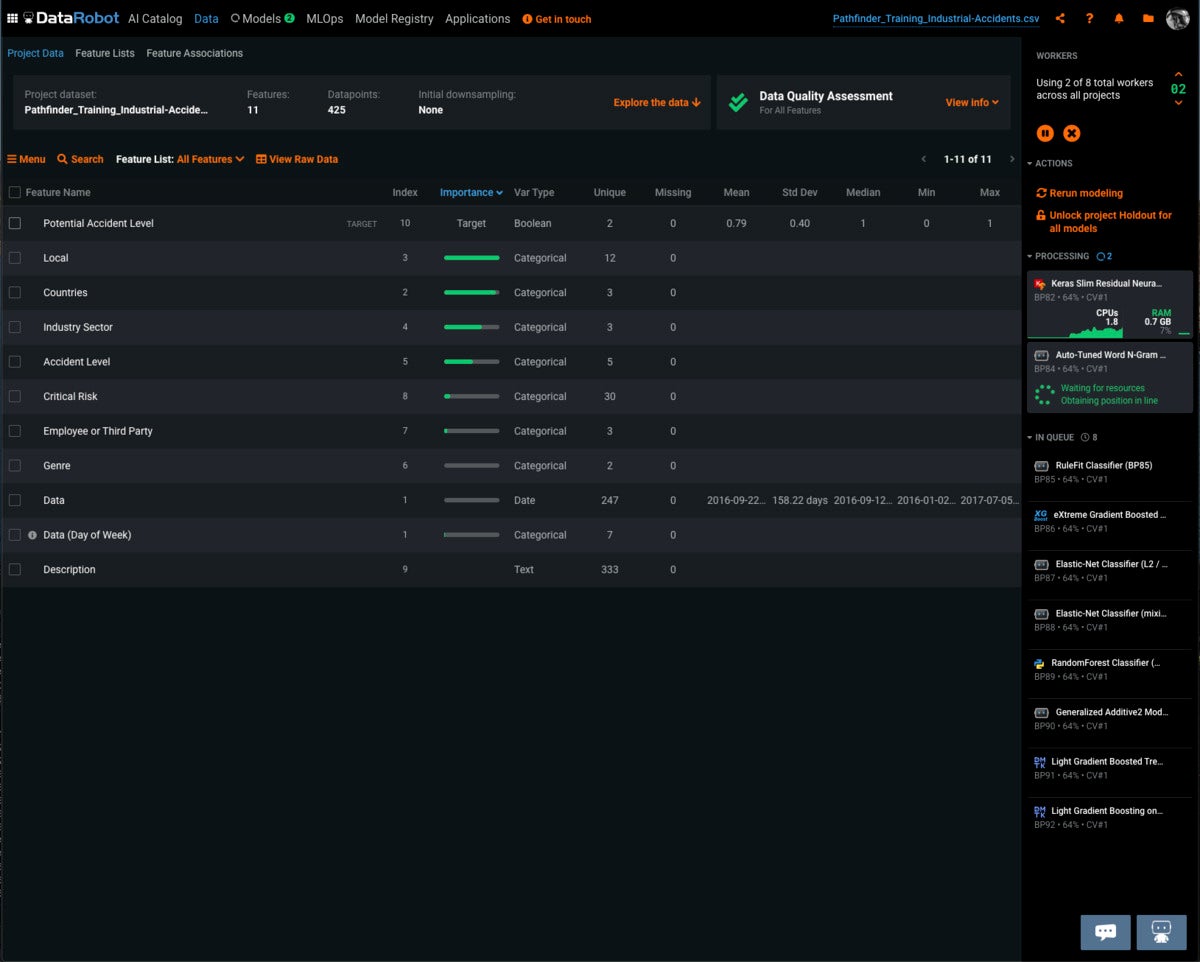

IDG

DataRobot AutoML in action. The models being trained are at the right, along with the percentage of the data being used for training each model.

DataRobot time-aware modeling

DataRobot can do two kinds of time-aware modeling if you have date/time features in your dataset. You should use out-of-time validation (OTV) when your data is time-relevant but you are not forecasting (instead, you are predicting the target value on each individual row). Use OTV if you have single-event data, such as patient intake or loan defaults.
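The core idea of out-of-time validation is that the validation rows all come after the training rows in time, so the model is never scored on a period it has already seen. DataRobot chooses its own backtest partitions. This sketch only shows the principle with a single hypothetical cutoff date on hypothetical loan records.

```python
from datetime import date
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def out_of_time_split(rows: List[Row], date_key: str, cutoff: date) -> Tuple[List[Row], List[Row]]:
    """Train on rows dated before `cutoff`; validate on rows dated on or after it.

    Unlike a random split, the model never trains on rows from the "future"
    relative to the rows it is validated on.
    """
    train = [r for r in rows if r[date_key] < cutoff]
    valid = [r for r in rows if r[date_key] >= cutoff]
    return train, valid


loans = [  # hypothetical single-event records, one row per loan
    {"issued": date(2019, 3, 1), "amount": 12_000, "defaulted": 0},
    {"issued": date(2019, 9, 15), "amount": 30_000, "defaulted": 1},
    {"issued": date(2020, 2, 10), "amount": 8_000, "defaulted": 0},
]
train, valid = out_of_time_split(loans, "issued", cutoff=date(2020, 1, 1))
print(len(train), len(valid))  # 2 1
```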

You can use time series when you want to forecast multiple future values of the target (for example, predicting sales for each day next week). Use time series to extrapolate future values in a continuous sequence.

In general, it has been difficult for machine learning models to outperform traditional statistical models for time series prediction, such as ARIMA. DataRobot's time series functionality works by encoding time-sensitive components as features that can contribute to ordinary machine learning models. It adds columns to each row for examples of predicting different distances into the future, and columns of lagged features and rolling statistics for predicting at that distance.
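Here is one common way to lay out that kind of encoding. Each training row pairs a forecast point `t` with a forecast distance `h`. Its features are lagged values and a rolling mean known at `t`, and its target is the value at `t + h`. The lags (1 and 7 days), the 3-day window, and distances of 1 and 2 are illustrative assumptions, not DataRobot's defaults.

```python
from statistics import mean
from typing import Dict, List, Sequence


def make_training_rows(series: Sequence[float], lags=(1, 7), window=3,
                       horizons=(1, 2)) -> List[Dict[str, float]]:
    """One supervised row per (forecast point t, forecast distance h).

    Features use only values known at t: lagged values series[t - k] and the
    rolling mean of the last `window` values ending at t. The target is series[t + h].
    """
    rows = []
    first_t = max(max(lags), window - 1)  # earliest t with enough history
    for t in range(first_t, len(series)):
        for h in horizons:
            if t + h >= len(series):
                continue  # target lies beyond the end of the data
            row = {f"lag_{k}": series[t - k] for k in lags}
            row[f"rolling_mean_{window}"] = mean(series[t - window + 1 : t + 1])
            row["distance"] = h
            row["target"] = series[t + h]
            rows.append(row)
    return rows


daily_sales = list(range(20))  # toy series: 0, 1, ..., 19
rows = make_training_rows(daily_sales)
print(len(rows))  # 23
```

Once the data is in this shape, any regression model can learn the forecast. The `distance` column lets a single model serve every horizon.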

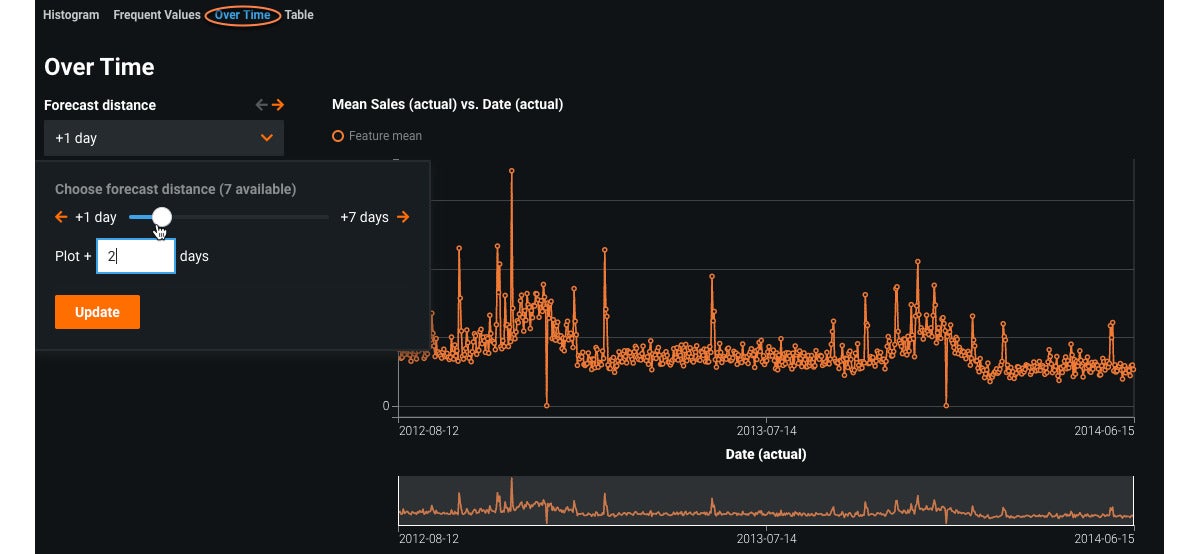

IDG

Values over time graph for time-related data. This helps to identify trends, weekly patterns, and seasonal patterns.

DataRobot Visual AI

In April 2020 DataRobot added image processing to its arsenal. Visual AI allows you to build binary and multi-class classification and regression models with images. You can use it to build completely new image-based models or to add images as new features to existing models.

Visual AI uses pre-trained neural networks, and adds three new insights: Neural Network Visualizer, Image Embeddings, and Activation Maps. As always, DataRobot can combine its models for different field types, so classified images can add accuracy to models that also use numeric, text, and geospatial data. For example, an image of a kitchen that is modern and spacious and has new-looking, high-end appliances might result in a home-pricing model increasing its estimate of the sale price.

There is no need to provision GPUs for Visual AI. Unlike training image models from scratch, using Visual AI's pre-trained neural networks works fine on CPUs, and doesn't even take very long.

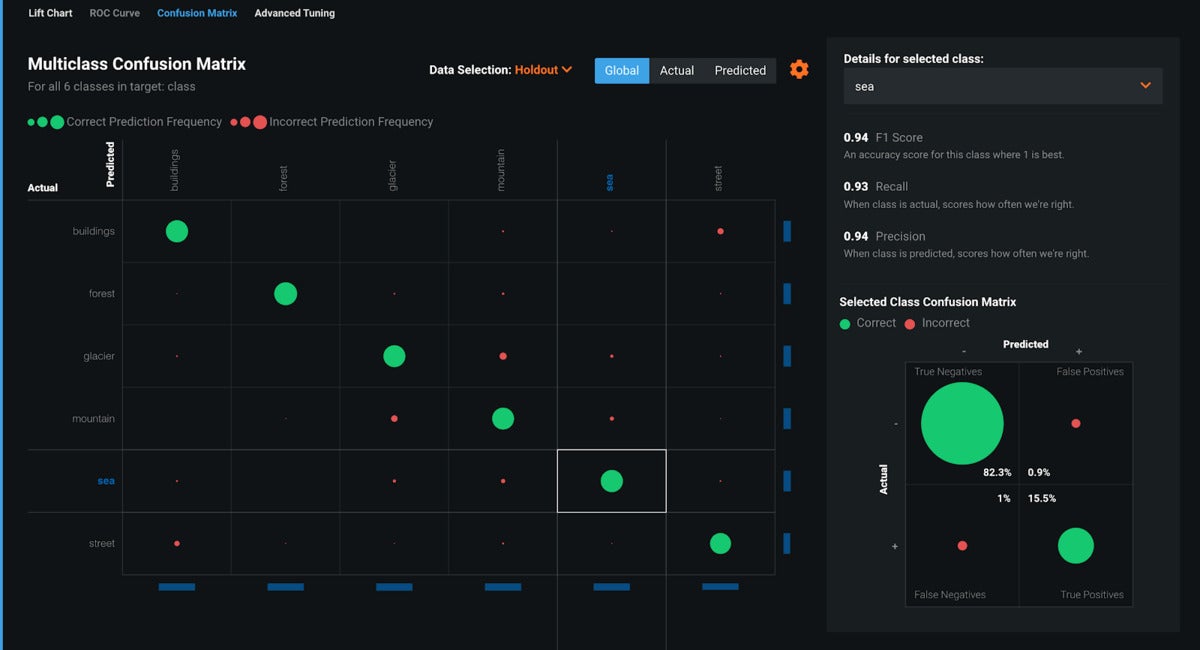

IDG

This multi-class confusion matrix for image classification shows a fairly clean separation, with most of the predictions true positives or true negatives.
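To make "clean separation" concrete: in a multi-class confusion matrix, rows are actual classes and columns are predicted classes, so a good classifier puts most counts on the diagonal. Here is a minimal sketch with hypothetical room-type labels. DataRobot renders this matrix for you; the functions below are only illustrative.

```python
from collections import Counter
from typing import Dict, List, Sequence


def confusion_matrix(actual: Sequence[str], predicted: Sequence[str],
                     labels: Sequence[str]) -> List[List[int]]:
    """matrix[i][j] counts items whose actual class is labels[i] and predicted is labels[j]."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def per_class_recall(matrix: List[List[int]], labels: Sequence[str]) -> Dict[str, float]:
    """Share of each actual class that landed on the diagonal (correctly classified)."""
    return {
        label: (row[i] / sum(row) if sum(row) else 0.0)
        for i, (label, row) in enumerate(zip(labels, matrix))
    }


labels = ["kitchen", "bathroom", "exterior"]  # hypothetical image classes
actual = ["kitchen", "kitchen", "bathroom", "bathroom", "exterior", "exterior"]
predicted = ["kitchen", "kitchen", "bathroom", "kitchen", "exterior", "exterior"]
m = confusion_matrix(actual, predicted, labels)
print(m)                            # [[2, 0, 0], [1, 1, 0], [0, 0, 2]]
print(per_class_recall(m, labels))  # {'kitchen': 1.0, 'bathroom': 0.5, 'exterior': 1.0}
```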

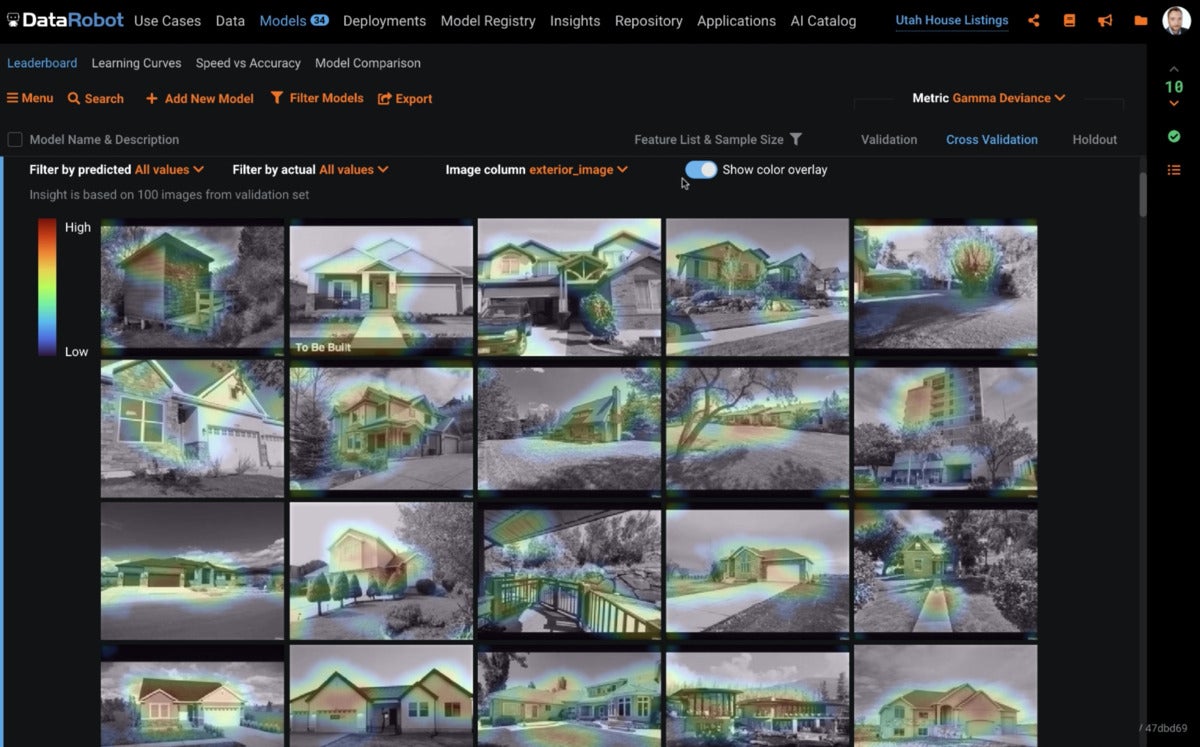

IDG

The color overlays in these house exterior images from the Residence Listings demo highlight the features the model factored into its sale price predictions. These factors were combined with other fields, such as square footage and number of bedrooms.

DataRobot Trustworthy AI

It is easy for an AI model to go off track, and there are numerous examples of what not to do in the literature. Contributing factors include outliers in the training data, training data that is not representative of the real distribution, features that are dependent on other features, too many missing feature values, and features that leak the target value into the training.

DataRobot has guardrails to detect these conditions. You can fix them in the AutoML phase, or preferably in the data prep phase. Guardrails let you trust the model more, but they are not infallible.
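The review doesn't describe how DataRobot's guardrails work internally. Here is one crude heuristic for target leakage: flag any numeric feature whose Pearson correlation with the target is nearly perfect. The 0.95 threshold and the housing columns are hypothetical. Real leakage checks are more involved, and a high correlation can also be legitimate.

```python
import math
from typing import Dict, List, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 for a constant column."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def leakage_suspects(features: Dict[str, Sequence[float]], target: Sequence[float],
                     threshold: float = 0.95) -> List[str]:
    """Flag features that track the target almost perfectly (|r| >= threshold)."""
    return [name for name, col in features.items()
            if abs(pearson(col, target)) >= threshold]


sale_price = [200, 250, 300, 350, 400]  # thousands of dollars
features = {
    "bedrooms": [3, 2, 4, 3, 3],
    # A transfer tax computed from the final sale price leaks the target.
    "transfer_tax": [2.0, 2.5, 3.0, 3.5, 4.0],
}
print(leakage_suspects(features, sale_price))  # ['transfer_tax']
```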

Humble AI rules allow DataRobot to detect out-of-range or uncertain predictions as they happen, as part of the MLOps deployment. For example, a home value of $100 million in Cleveland is unheard of; a prediction in that range is most likely a mistake. For another example, a predicted probability of 0.5 may indicate uncertainty. There are three ways of responding when humility rules fire: do nothing but keep track, so that you can later refine the model using more data; override the prediction with a "safe" value; or return an error.
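Here is a minimal sketch of how such rules and the three responses fit together. A rule is a predicate on a prediction, and the action decides what happens when it fires. The names `Action`, `out_of_range`, `uncertain`, and `apply_rule` are hypothetical and do not mirror DataRobot's configuration or API. The price range and the 0.05 margin around 0.5 are also assumptions.

```python
from enum import Enum
from typing import Callable, List, Optional


class Action(Enum):
    NO_OP = "do nothing but keep track"
    OVERRIDE = "override with a safe value"
    ERROR = "return an error"


def out_of_range(low: float, high: float) -> Callable[[float], bool]:
    """Rule that fires when a prediction falls outside [low, high]."""
    return lambda p: not (low <= p <= high)


def uncertain(margin: float = 0.05) -> Callable[[float], bool]:
    """Rule that fires when a predicted probability is within `margin` of 0.5."""
    return lambda p: abs(p - 0.5) <= margin


def apply_rule(prediction: float, fires: Callable[[float], bool], action: Action,
               log: List[float], safe_value: Optional[float] = None) -> Optional[float]:
    if not fires(prediction):
        return prediction
    log.append(prediction)  # every trigger is recorded for later model refinement
    if action is Action.OVERRIDE:
        return safe_value
    if action is Action.ERROR:
        raise ValueError(f"humility rule fired for prediction {prediction!r}")
    return prediction  # Action.NO_OP: pass the prediction through unchanged


log: List[float] = []
cleveland_price = out_of_range(20_000, 5_000_000)  # hypothetical plausible range
print(apply_rule(100_000_000, cleveland_price, Action.OVERRIDE, log, safe_value=180_000))  # 180000
print(apply_rule(0.52, uncertain(), Action.NO_OP, log))  # 0.52
print(log)  # [100000000, 0.52]
```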

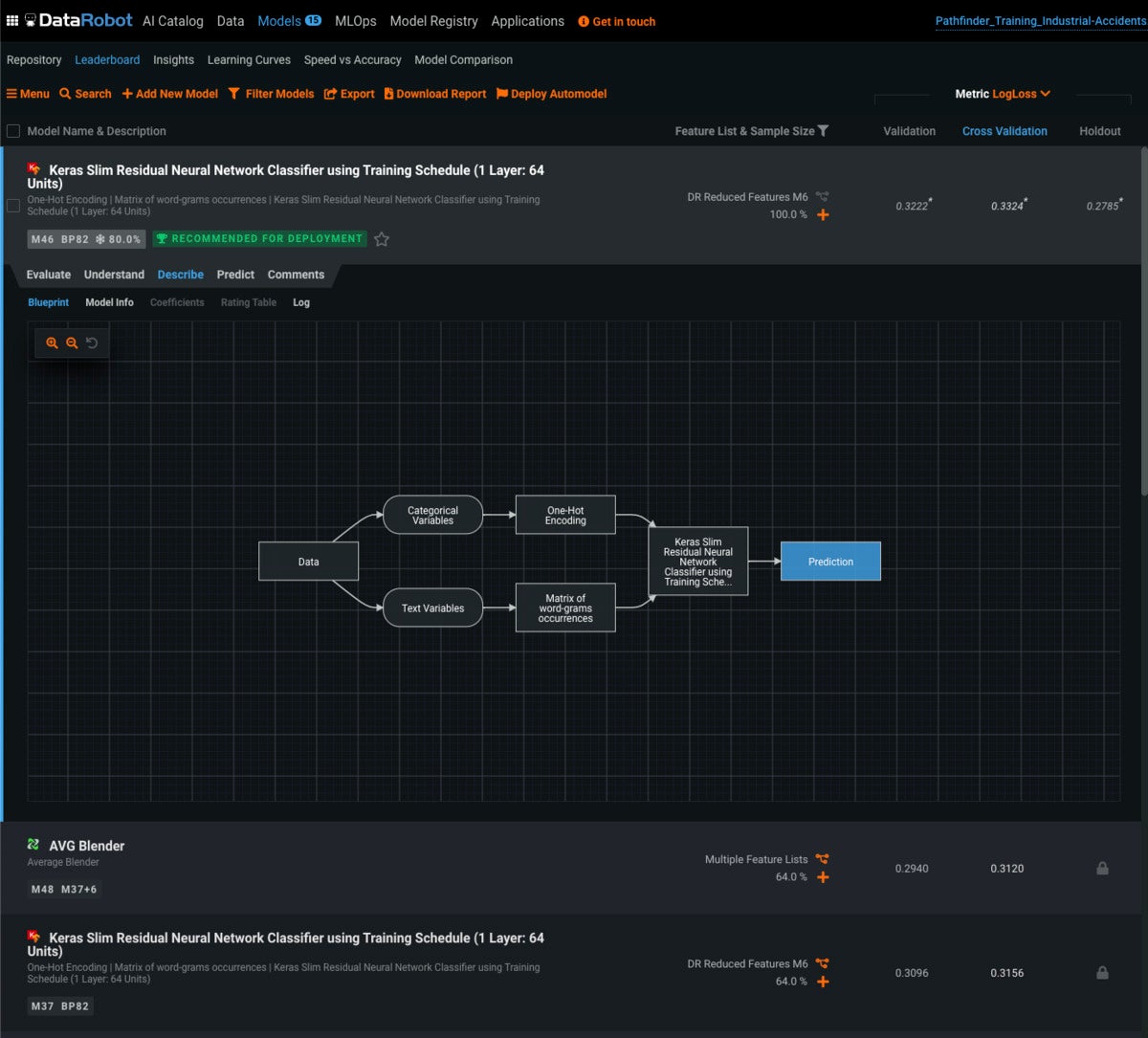

Too many machine learning models lack explainability; they are nothing more than black boxes. That is often especially true of AutoML. DataRobot, however, goes to great lengths to explain its models. The diagram that follows is fairly simple, as neural network models go, but you can see the strategy of processing text and categorical variables in separate branches and then feeding the results into a neural network.

IDG

Blueprint for an AutoML model. This model processes categorical variables using one-hot encoding and text variables using word-grams, then factors them all into a Keras Slim Residual neural network classifier. You can drill into any box to see the parameters and get a link to the relevant documentation.
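The two preprocessing branches in that blueprint can be illustrated in a few lines. One-hot encoding gives each category its own 0/1 column. Word-grams turn text into counts of single words and adjacent word pairs. Both produce numeric vectors that can be concatenated and fed to a network. This is a minimal sketch with hypothetical columns; DataRobot's actual tokenization and weighting may differ, and the Keras network itself is omitted.

```python
from typing import List, Sequence, Tuple


def one_hot(values: Sequence[str]) -> Tuple[List[str], List[List[int]]]:
    """One column per category; each row has a single 1 in its category's column."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


def word_grams(text: str, n_max: int = 2) -> List[str]:
    """All word n-grams of length 1..n_max, lowercased."""
    words = text.lower().split()
    grams: List[str] = []
    for n in range(1, n_max + 1):
        grams += [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]
    return grams


def bag_of_grams(texts: Sequence[str], n_max: int = 2) -> Tuple[List[str], List[List[int]]]:
    """Count each word-gram per text over a shared, sorted vocabulary."""
    vocab = sorted({g for t in texts for g in word_grams(t, n_max)})
    index = {g: i for i, g in enumerate(vocab)}
    rows = []
    for t in texts:
        row = [0] * len(vocab)
        for g in word_grams(t, n_max):
            row[index[g]] += 1
        rows.append(row)
    return vocab, rows


home_type = ["condo", "house"]             # hypothetical categorical column
description = ["big yard", "big kitchen"]  # hypothetical text column
_, cat_rows = one_hot(home_type)
_, text_rows = bag_of_grams(description)
# Concatenate the two branches into one numeric input vector per row.
combined = [c + t for c, t in zip(cat_rows, text_rows)]
print(combined)  # [[1, 0, 1, 0, 1, 0, 1], [0, 1, 1, 1, 0, 1, 0]]
```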

DataRobot MLOps

Once you have built a good model you can deploy it as a prediction service. That is not the end of the story, however. Over time, conditions change. We can see an example in the graphs below. Based on these results, some of the data that flows into the model (elementary school locations) needs to be updated, and then the model needs to be retrained and redeployed.

IDG

Looking at the feature drift from MLOps tells you when conditions change that affect the model's predictions. Here we see that a new elementary school has opened, which typically raises the value of nearby homes.
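The review doesn't say how MLOps quantifies feature drift. The Population Stability Index (PSI) is one widely used measure. It bins a feature using fixed cut points, compares the share of training rows and scoring rows in each bin, and sums (current − baseline) × ln(current / baseline) over the bins. Here is a minimal sketch with a hypothetical "miles to nearest elementary school" feature and hypothetical bin edges. A commonly quoted rule of thumb treats a PSI above about 0.25 as a major shift.

```python
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin defined by ascending cut points `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1
    # Floor empty bins at a tiny share so the log term below stays finite.
    return [max(c / len(values), 1e-6) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum over bins of (cur - base) * ln(cur / base)."""
    base, cur = bin_shares(baseline, edges), bin_shares(current, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical feature: miles to the nearest elementary school.
training = [1.5, 2.5, 1.2, 2.8, 3.5, 0.5, 2.2, 1.8]
scoring = [0.4, 0.6, 0.8, 0.3, 0.9, 2.5, 0.7, 1.1]  # after a new school opens nearby
edges = [1.0, 2.0, 3.0]
print(round(psi(training, training, edges), 6))  # 0.0
print(psi(training, scoring, edges) > 0.25)      # True
```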

All told, DataRobot now has an end-to-end AutoML suite that takes you from data gathering through model building to deployment, monitoring, and management. DataRobot has paid attention to the pitfalls in AI model building and provided ways to mitigate many of them. Overall, I rate DataRobot very good, and a worthy competitor to Google, AWS, Microsoft, and H2O.ai. I have not reviewed the machine learning offerings from IBM, MathWorks, or SAS recently enough to rate them.

I was surprised and impressed to discover that DataRobot can run on CPUs without accelerators and produce models in a few hours, even when building neural network models that include image classification. That may give it a slight edge over the three competitors I mentioned for AutoML, because GPUs and TPUs are not cheap.