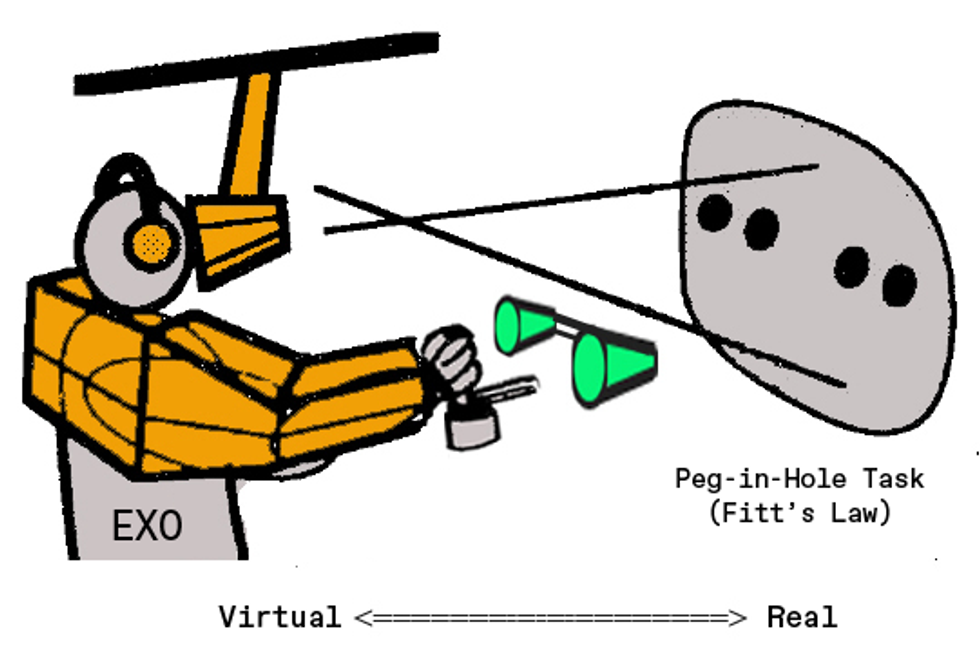

I climb into an upper-body exoskeleton that’s covered in sensors, motors, gears, and bearings, and then lean forward, tilting my head up to press my face against the eyepieces of a vision system hanging from the ceiling. In front of me, I see a large wooden board, painted black and punctuated by a grid of metal holes. The board is real. So is the peg in my hand that I’m trying to move from one hole to another, as fast as I can. When I begin to move the peg, a virtual cone appears over the target hole, along with a virtual surface leading toward it. I can feel the surface as I slide the peg along it toward the cone and into the hole.

This was the Virtual Fixtures platform, which was developed in the early 1990s to test the potential of “perceptual overlays” to improve human performance in manual tasks that require dexterity. And it worked.

Today, virtual-reality experts look back on the platform as the first interactive augmented-reality system that enabled users to engage simultaneously with real and virtual objects in a single immersive reality.

The project began in 1991, when I pitched the effort as part of my doctoral research at Stanford University. By the time I finished, three years and multiple prototypes later, the system I had assembled filled half a room and used nearly a million dollars’ worth of hardware. And I had collected enough data from human testing to definitively show that augmenting a real workspace with virtual objects could significantly enhance user performance in precision tasks.

Given the short time frame, it might sound like all went smoothly, but the project came close to being derailed many times, thanks to a tight budget and substantial equipment needs. In fact, the effort might have crashed early on, had a parachute (a real one, not a virtual one) not failed to open in the clear blue skies over Dayton, Ohio, during the summer of 1992.

Before I explain how a parachute accident helped drive the development of augmented reality, I’ll lay out a little of the historical context.

Thirty years ago, the field of virtual reality was in its infancy, the phrase itself having only been coined in 1987 by Jaron Lanier, who was commercializing some of the first headsets and gloves. His work built on earlier research by Ivan Sutherland, who pioneered head-mounted display technology and head-tracking, two critical elements that sparked the VR field. Augmented reality (AR), the combining of the real world and the virtual world into a single immersive and interactive reality, did not yet exist in a meaningful way.

Back then, I was a graduate student at Stanford University and a part-time researcher at NASA’s Ames Research Center, interested in the creation of virtual worlds. At Stanford, I worked in the Center for Design Research, a group focused on the intersection of humans and technology that created some of the very early VR gloves, immersive vision systems, and 3D audio systems. At NASA, I worked in the Advanced Displays and Spatial Perception Laboratory of the Ames Research Center, where researchers were exploring the fundamental parameters required to enable realistic and immersive simulated worlds.

Of course, knowing how to create a quality VR experience and being able to produce it are not the same thing. The best PCs on the market back then used Intel 486 processors running at 33 megahertz. Adjusted for inflation, they cost about US $8,000 and weren’t even a thousandth as fast as a cheap gaming computer today. The other option was to invest $60,000 in a Silicon Graphics workstation, which was still less than a hundredth as fast as a mediocre PC today. So, though researchers working in VR during the late 1980s and early 1990s were doing groundbreaking work, the resulting virtual experiences were plagued by crude graphics, bulky headsets, and lag so bad it made people dizzy or nauseated.

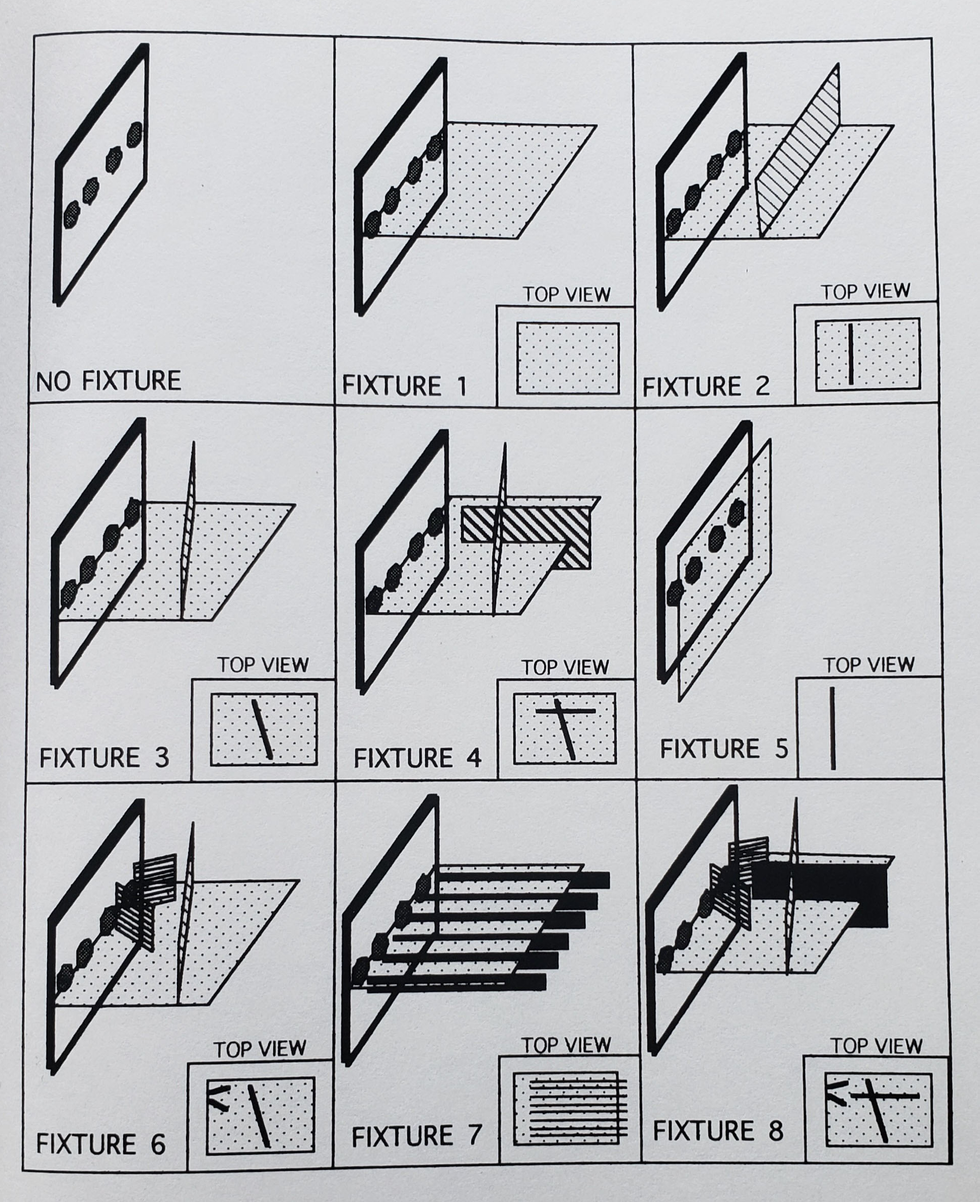

These early drawings of a real pegboard combined with virtual overlays generated by a computer, an early version of augmented reality, were created by Louis Rosenberg as part of his Virtual Fixtures project. Louis Rosenberg

I was conducting a research project at NASA to optimize depth perception in early 3D-vision systems, and I was one of those people getting dizzy from the lag. And I found that the images created back then were definitely virtual but far from reality.

Still, I wasn’t discouraged by the dizziness or the low fidelity, because I was sure the hardware would steadily improve. Instead, I was concerned about how enclosed and isolated the VR experience made me feel. I wished I could expand the technology, taking the power of VR and unleashing it into the real world. I dreamed of creating a merged reality in which virtual objects inhabited your physical surroundings in such an authentic manner that they seemed like genuine parts of the world around you, enabling you to reach out and interact as if they were actually there.

I was aware of one very basic sort of merged reality, the head-up display, in use by military pilots, enabling flight data to appear in their lines of sight so they didn’t have to look down at cockpit gauges. I hadn’t experienced such a display myself, but I became familiar with them thanks to a few 1980s blockbusters, including Top Gun and The Terminator. In Top Gun, a glowing crosshair appeared on a glass panel in front of the pilot during dogfights; in The Terminator, crosshairs joined text and numerical data as part of the fictional cyborg’s view of the world around it.

Neither of these merged realities was the slightest bit immersive, presenting images on a flat plane rather than connected to the real world in 3D space. But they hinted at interesting possibilities. I thought I could move far beyond simple crosshairs and text on a flat plane to create virtual objects that could be spatially registered to real objects in an ordinary environment. And I hoped to instill those virtual objects with realistic physical properties.

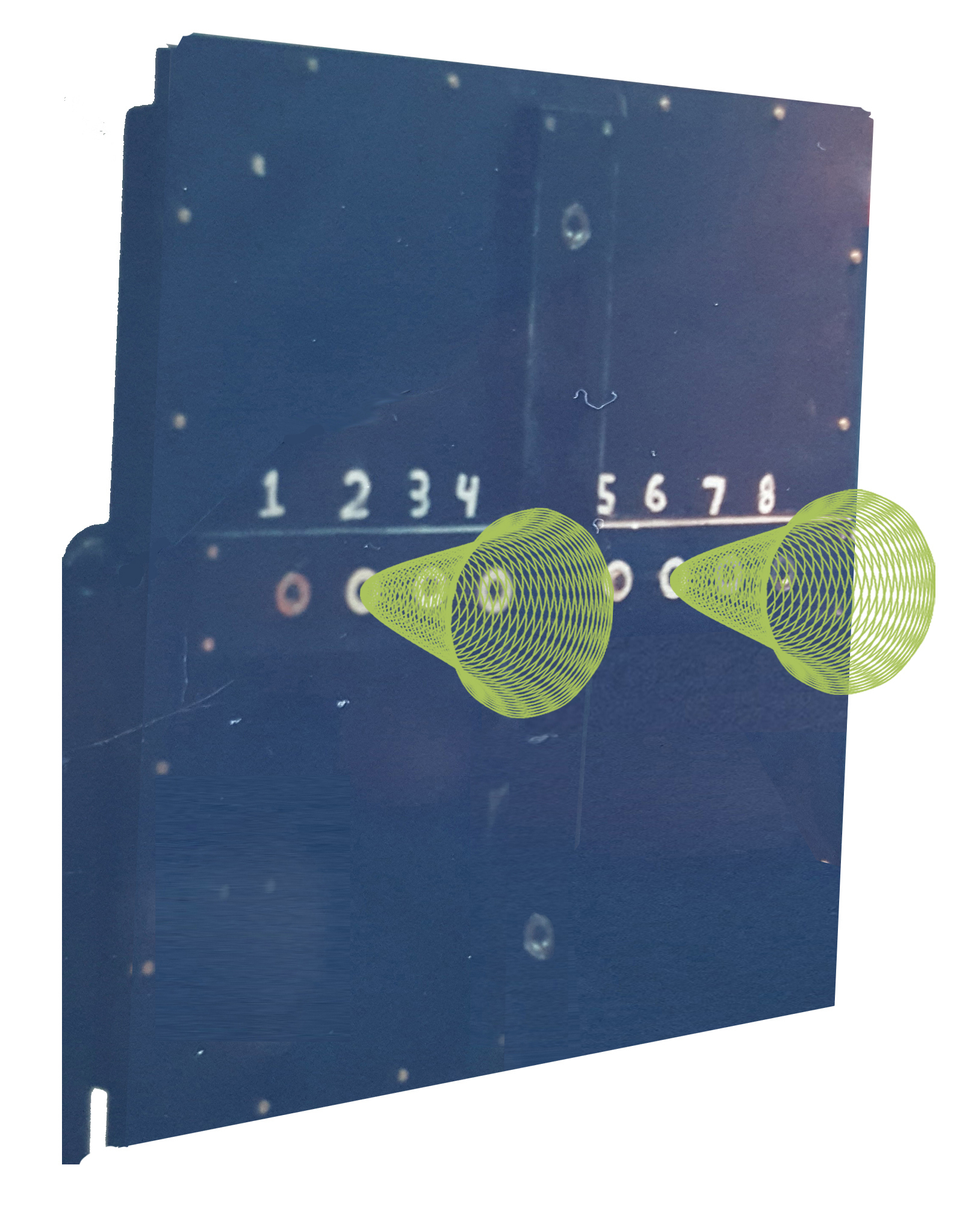

The Fitts’s Law peg-insertion task involves having test subjects quickly move metal pegs between holes. The board shown here was real; the cones that helped guide the user to the correct holes were virtual. Louis Rosenberg

I needed substantial resources, beyond what I had access to at Stanford and NASA, to pursue this vision. So I pitched the concept to the Human Sensory Feedback Group of the U.S. Air Force’s Armstrong Laboratory, now part of the Air Force Research Laboratory.

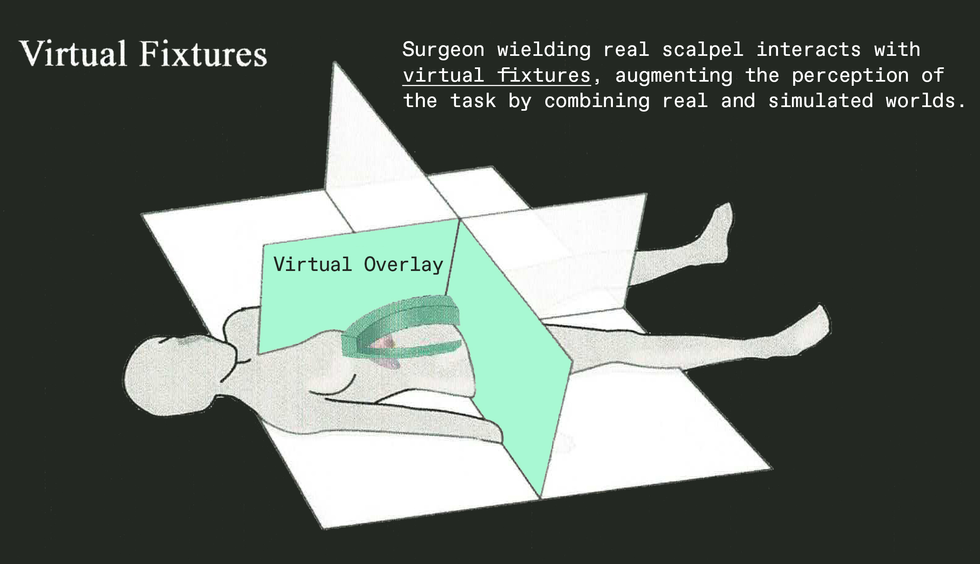

To explain the practical value of merging real and virtual worlds, I used the analogy of a simple metal ruler. If you want to draw a straight line in the real world, you can do it freehand, going slowly and using significant mental effort, and it still won’t be particularly straight. Or you can grab a ruler and do it much quicker with far less mental effort. Now imagine that instead of a real ruler, you could grab a virtual ruler and make it instantly appear in the real world, perfectly registered to your real surroundings. And imagine that this virtual ruler feels physically authentic, so much so that you can use it to guide your real pencil. Because it’s virtual, it can be any shape and size, with interesting and useful properties that you could never achieve with a metal straightedge.

Of course, the ruler was just an analogy. The applications I pitched to the Air Force ranged from augmented manufacturing to surgery. For example, consider a surgeon who needs to make a dangerous incision. She could use a bulky metal fixture to steady her hand and avoid vital organs. Or we could invent something new to augment the surgery: a virtual fixture to guide her real scalpel, not just visually but physically. Because it’s virtual, such a fixture would pass right through the patient’s body, sinking into tissue before a single cut had been made. That was the concept that got the military excited, and their interest wasn’t just in in-person tasks like surgery but also in remote tasks performed using remotely controlled robots. For example, a technician on Earth could repair a satellite by controlling a robot remotely, assisted by virtual fixtures added to video images of the real worksite. The Air Force agreed to provide enough funding to cover my expenses at Stanford along with a small budget for equipment. Perhaps more significantly, I also got access to computers and other equipment at Wright-Patterson Air Force Base near Dayton, Ohio.

And so what became known as the Virtual Fixtures Project came to life, working toward building a prototype that could be rigorously tested with human subjects. I became a roving researcher, developing core ideas at Stanford, fleshing out some of the underlying technologies at NASA Ames, and assembling the full system at Wright-Patterson.

In this sketch of his augmented-reality system, Louis Rosenberg shows a user of the Virtual Fixtures platform wearing a partial exoskeleton and peering at a real pegboard augmented with cone-shaped virtual fixtures. Louis Rosenberg

Now about those parachutes.

As a young researcher in my early twenties, I was eager to learn about the many projects going on around me at these various laboratories. One effort I followed closely at Wright-Patterson was a project designing new parachutes. As you might expect, when the research team came up with a new design, they didn’t just strap a person in and test it. Instead, they attached the parachutes to dummy rigs fitted with sensors and instrumentation. Two engineers would go up in an airplane with the hardware, dropping rigs and jumping alongside so they could observe how the chutes unfolded. Stick with my story and you’ll see how this became key to the development of that early AR system.

Back at the Virtual Fixtures effort, I aimed to prove the basic concept: that a real workspace could be augmented with virtual objects that feel so real, they could assist users as they performed dexterous manual tasks. To test the idea, I wasn’t going to have users perform surgery or repair satellites. Instead, I needed a simple repeatable task to quantify manual performance. The Air Force already had a standardized task it had used for years to test human dexterity under a variety of mental and physical stresses. It’s called the Fitts’s Law peg-insertion task, and it involves having test subjects quickly move metal pegs between holes on a large pegboard.
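
For readers unfamiliar with Fitts’s law, it models the time needed to move to a target as a function of the distance to the target and the target’s width. The following is a minimal sketch in Python, assuming the classic formulation in which the index of difficulty is log2(2D/W). The function names and the constants `a` and `b` are hypothetical placeholders for illustration; they are not values from the Virtual Fixtures experiments.

```python
import math


def index_of_difficulty(distance, width):
    """Fitts's index of difficulty in bits, classic form log2(2D / W).

    distance: center-to-center distance between holes (any length unit)
    width:    target (hole) width, in the same unit as distance
    """
    if distance <= 0 or width <= 0:
        raise ValueError("distance and width must be positive")
    return math.log2(2.0 * distance / width)


def predicted_movement_time(distance, width, a=0.1, b=0.15):
    """Predicted movement time MT = a + b * ID, in seconds.

    a (seconds) and b (seconds per bit) are hypothetical; in practice they
    are fitted by regression to measured trials for a given person and setup.
    """
    return a + b * index_of_difficulty(distance, width)


# Example: holes 16 units apart, each 1 unit wide -> log2(32) = 5 bits.
difficulty_bits = index_of_difficulty(distance=16.0, width=1.0)
```

Under this model, a fixture that helps a user reach the hole faster shows up as a shorter measured movement time for the same index of difficulty, which is what makes the task a convenient yardstick for dexterity.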

So I began assembling a system that would enable virtual fixtures to be merged with a real pegboard, creating a mixed-reality experience perfectly registered in 3D space. I aimed to make these virtual objects feel so real that bumping the real peg into a virtual fixture would feel as authentic as bumping into the actual board.

I wrote software to simulate a wide range of virtual fixtures, from simple surfaces that prevented your hand from overshooting a target hole, to carefully shaped cones that could help a user guide the real peg into the real hole. I created virtual overlays that simulated textures and had corresponding sounds, even overlays that simulated pushing through a thick liquid as if it were virtual honey.

One imagined use for augmented reality at the time of its creation was in surgery. Today, augmented reality is used for surgical training, and surgeons are beginning to use it in the operating room. Louis Rosenberg

For more realism, I modeled the physics of each virtual element, registering its location accurately in three dimensions so it lined up with the user’s perception of the real wooden board. Then, when the user moved a hand into an area corresponding to a virtual surface, motors in the exoskeleton would physically push back, an interface technology now commonly called “haptics.” It felt so authentic that you could slide along the edge of a virtual surface the way you might move a pencil against a real ruler.
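
A common way to make motors “push back” at a virtual surface is a penalty-based spring: the deeper the hand penetrates the surface, the harder the motors push it out. The sketch below is an illustration of that general technique only; it is not the original Virtual Fixtures software, and the function name, parameters, and stiffness value are all assumptions.

```python
def virtual_wall_force(position, wall_point, wall_normal, stiffness=800.0):
    """Return the (x, y, z) force in newtons pushing a hand out of a virtual wall.

    position:    current hand or peg position, in meters
    wall_point:  any point on the virtual plane, in meters
    wall_normal: unit vector pointing from the wall into free space
    stiffness:   hypothetical spring constant in N/m (an assumed value)
    """
    # Signed distance from the plane: positive in free space, negative inside.
    offset = [p - w for p, w in zip(position, wall_point)]
    signed_distance = sum(o * n for o, n in zip(offset, wall_normal))

    if signed_distance >= 0.0:
        return (0.0, 0.0, 0.0)  # not touching the wall, so no force

    # Inside the wall: push back along the normal, proportional to depth.
    penetration = -signed_distance
    return tuple(stiffness * penetration * n for n in wall_normal)


# A hand 5 mm below a horizontal surface at z = 0 is pushed upward.
force = virtual_wall_force((0.0, 0.0, -0.005), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```

Because the force acts only along the surface normal, motion parallel to the surface is unopposed, which is why the hand can slide along a virtual edge as it would along a ruler. A real controller would run a loop like this at a high update rate and typically add damping for stability.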

To accurately align these virtual elements with the real pegboard, I needed high-quality video cameras. Video cameras at the time were far more expensive than they are today, and I had no money left in my budget to buy them. This was a frustrating barrier: The Air Force had given me access to a wide range of amazing hardware, but when it came to simple cameras, they couldn’t help. It seemed like every research project needed them, most of far higher priority than mine.

Which brings me back to the skydiving engineers testing experimental parachutes. These engineers came into the lab one day to chat; they mentioned that their chute had failed to open, their dummy rig plummeting to the ground and destroying all the sensors and cameras aboard.

This seemed like it would be a setback for my project as well, because I knew that if there were any extra cameras in the building, the engineers would get them.

But then I asked if I could take a look at the wreckage from their failed test. It was a mangled mess of bent metal, dangling circuits, and smashed cameras. Still, though the cameras looked awful with cracked cases and damaged lenses, I wondered if I could get any of them to work well enough for my needs.

By some miracle, I was able to piece together two working units from the six that had plummeted to the ground. And so, the first human testing of an interactive augmented-reality system was made possible by cameras that had literally fallen out of the sky and smashed into the earth.

To appreciate how important these cameras were to the system, think of a simple AR application today, like Pokémon Go. If you didn’t have a camera on the back of your phone to capture and display the real world in real time, it wouldn’t be an augmented-reality experience; it would just be a standard video game.

The same was true for the Virtual Fixtures system. But thanks to the cameras from that failed parachute rig, I was able to create a mixed reality with accurate spatial registration, providing an immersive experience in which you could reach out and interact with the real and virtual environments simultaneously.

As for the experimental part of the project, I conducted a series of human studies in which users experienced a variety of virtual fixtures overlaid onto their perception of the real task board. The most useful fixtures turned out to be cones and surfaces that could guide the user’s hand as they aimed the peg toward a hole. The most effective involved physical experiences that couldn’t be easily manufactured in the real world but were readily achievable virtually. For example, I coded virtual surfaces that were “magnetically attractive” to the peg. For the users, it felt as if the peg had snapped to the surface. Then they could glide along it until they chose to yank free with another snap. Such fixtures increased speed and dexterity in the trials by more than 100 percent.
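
The snap-on, glide, and snap-off behavior described above can be approximated with a spring that switches on when the peg comes close to the surface and switches off only after it has been pulled noticeably farther away. The sketch below is a hypothetical illustration of that idea, not the code used in the study; the class name, thresholds, and stiffness are assumed values.

```python
class SnapSurface:
    """Hypothetical "magnetic" virtual surface, modeled along its normal only.

    The peg attaches when it comes within `capture` meters of the surface and
    stays attached until it is pulled beyond `release` meters. Using two
    different thresholds (hysteresis) is what produces a distinct snap on
    and a distinct snap off.
    """

    def __init__(self, capture=0.01, release=0.03, stiffness=400.0):
        self.capture = capture      # attach distance in meters (assumed)
        self.release = release      # break-free distance in meters (assumed)
        self.stiffness = stiffness  # spring constant in N/m (assumed)
        self.attached = False

    def force(self, signed_distance):
        """Return the force along the surface normal for the given distance."""
        gap = abs(signed_distance)
        if not self.attached and gap <= self.capture:
            self.attached = True    # snap on
        elif self.attached and gap > self.release:
            self.attached = False   # yank free
        if not self.attached:
            return 0.0
        # While attached, a spring pulls the peg back toward the surface.
        return -self.stiffness * signed_distance


surface = SnapSurface()
far = surface.force(0.02)       # still free: no force
snapped = surface.force(0.005)  # within capture range: attaches, pulled in
held = surface.force(0.02)      # same 0.02 m as before, but now held
freed = surface.force(0.04)     # beyond release range: breaks free
```

Because the force acts only along the normal, the peg is free to glide across the surface while attached. The same 0.02 m distance gives zero force before the snap and a restoring force after it, which is the hysteresis at work.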

Of the various applications for Virtual Fixtures that we considered at the time, the most commercially viable back then involved manually controlling robots in remote or dangerous environments, for example, during hazardous-waste cleanup. If the communications distance introduced a time delay in the telerobotic control, virtual fixtures became even more valuable for enhancing human dexterity.

Today, researchers are still exploring the use of virtual fixtures for telerobotic applications with great success, including for use in satellite repair and robot-assisted surgery.

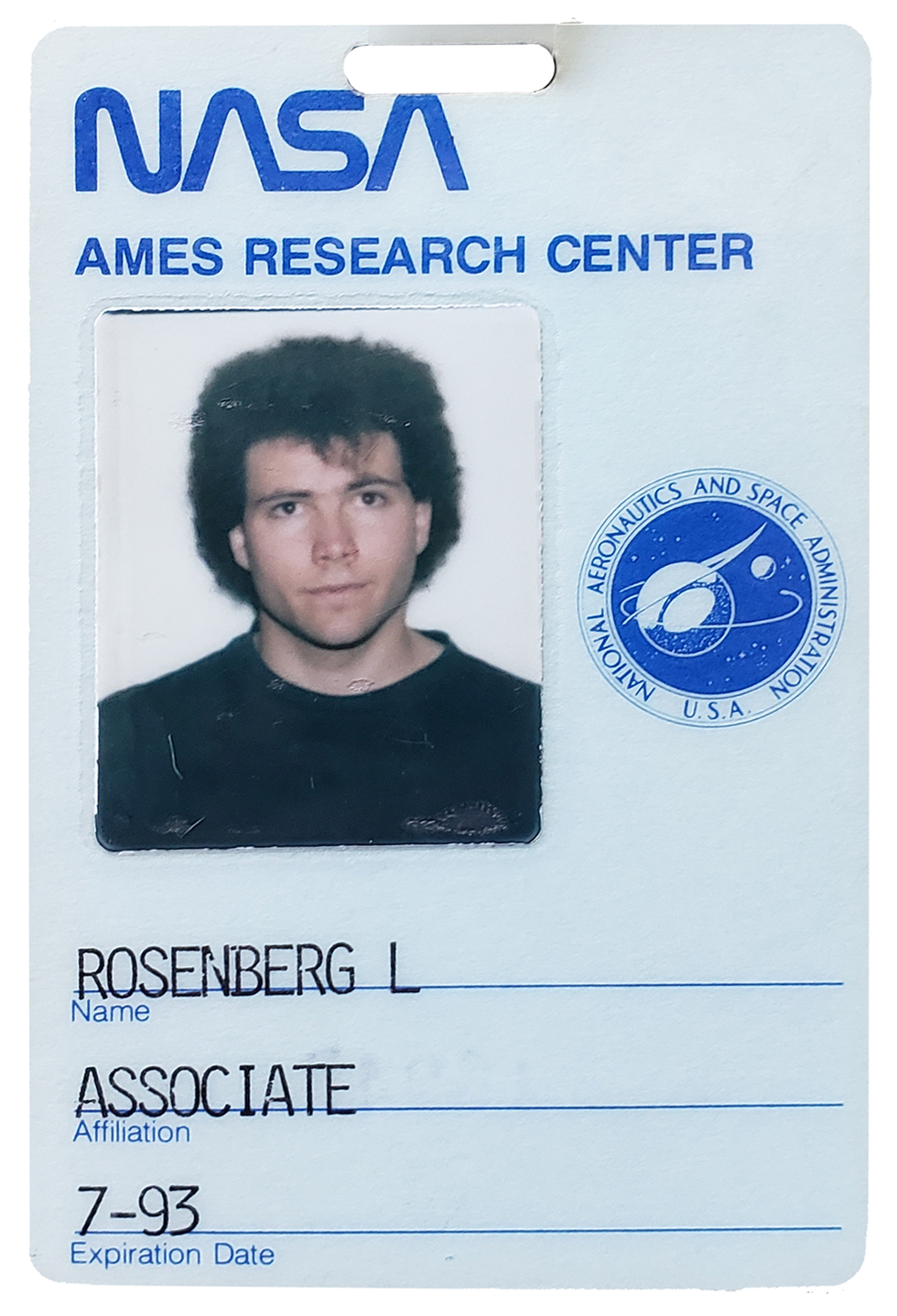

Louis Rosenberg spent some of his time working in the Advanced Displays and Spatial Perception Laboratory of the Ames Research Center as part of his research in augmented reality. Louis Rosenberg

I went in a different direction, pushing for more mainstream applications for augmented reality. That’s because the part of the Virtual Fixtures project that had the greatest impact on me personally wasn’t the improved performance in the peg-insertion task. Instead, it was the big smiles that lit up the faces of the human subjects when they climbed out of the system and effused about what a remarkable experience they had had. Many told me, without prompting, that this type of technology would one day be everywhere.

And indeed, I agreed with them. I was convinced we’d see this type of immersive technology go mainstream by the end of the 1990s. In fact, I was so inspired by the enthusiastic reactions people had when they tried those early prototypes that I founded a company, Immersion, in 1993 with the goal of pursuing mainstream consumer applications. Of course, it hasn’t happened nearly that fast.

At the risk of being wrong again, I sincerely believe that virtual and augmented reality, now commonly referred to as the metaverse, will become an important part of most people’s lives by the end of the 2020s. In fact, based on the recent surge of investment by major corporations into improving the technology, I predict that by the early 2030s augmented reality will replace the mobile phone as our primary interface to digital content.

And no, none of the test subjects who experienced that early glimpse of augmented reality 30 years ago knew they were using hardware that had fallen out of an airplane. But they did know that they were among the first to reach out and touch our augmented future.
