As data projects built in the Cloud become more and more frequent, a common use case is to interact with Cloud storage from an existing on-premises Big Data platform. Microsoft Azure recently introduced Data Lake Storage Gen2, which is built on top of Azure Blob storage and offers HDFS-like management of data on Azure. Because it is quite a new product (GA in February 2019), connecting to ADLS Gen2 from HDP and HDF is not yet supported in public releases.

In this article, we will see how to write data to ADLS Gen2 using:

- DistCp on HDP

- NiFi on HDF

Getting access to ADLS Gen2

Azure Data Lake Storage Gen2 supports the same authorization options as Azure Blob storage. In this article, we will access ADLS using a service principal, which leaves us with 3 options:

Creating a service principal in Azure AD

First, we need to create a service principal in our Azure tenant. Strictly speaking, we will create an application object, which gets one service principal in each tenant where it is used:

- Open the Azure Portal

- Go to the Azure Active Directory panel (you can find it using the search bar)

- Go to App registrations → New registration

- Just fill in the Name field (e.g. hdp-hdf-adls-app) and click Register

- Once the application is created, it appears in your “Owned applications”

- To be able to authenticate as the service principal, we will need a secret

- Go to your-app → Certificates & secrets

- Generate a new client secret and save it for later

Giving access to an ADLS storage account

In this article, we will give the service principal full access to the storage account (for fine-grained access, see Manage access rights with RBAC):

- Go to your storage account page → Access Control (IAM)

- Assign the Storage Blob Data Contributor role to your application
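If you prefer the command line, the same assignment can be made with Azure CLI, scoped to the storage account. The sketch below only builds the storage account resource ID used as the scope; `storage_account_scope`, `SUBSCRIPTION_ID` and `RESOURCE_GROUP` are hypothetical names, and the `az role assignment create` call is left as a comment because it needs a logged-in Azure session allowed to assign roles.

```shell
# Sketch: build the Azure resource ID of a storage account, to be used as the
# --scope of a role assignment. All argument values are placeholders.
storage_account_scope() {
  subscription_id="$1"
  resource_group="$2"
  storage_account="$3"
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Storage/storageAccounts/%s\n' \
    "$subscription_id" "$resource_group" "$storage_account"
}

# Usage (requires Azure CLI and a logged-in user allowed to assign roles):
#   scope=$(storage_account_scope "$SUBSCRIPTION_ID" "$RESOURCE_GROUP" ADLS_STORAGE_ACCOUNT)
#   az role assignment create --assignee MY_APP_ID \
#     --role "Storage Blob Data Contributor" --scope "$scope"
```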

Copying data from HDP using DistCp

Connecting to ADLS Gen2 from Hadoop is only supported since Hadoop 3.2.0. Public HDP releases ship at most Hadoop 3.1.1, without a backport of this feature, so none of them can do it out of the box.
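To check quickly whether a given Hadoop build is recent enough, we can compare its version with 3.2.0. This is a minimal sketch assuming GNU `sort -V`; `abfs_supported` is a hypothetical helper, and since it only looks at the version number, it cannot detect a vendor backport.

```shell
# Sketch: does a Hadoop version ship the ABFS (abfs:// / abfss://) connector?
# Assumes GNU coreutils `sort -V` for version-aware ordering.
abfs_min_version="3.2.0"

# Returns 0 if $1 >= 3.2.0, 1 otherwise.
abfs_supported() {
  version="$1"
  # sort -V prints the smaller version first: if that is the minimum
  # (or both are equal), the given version is recent enough.
  [ "$(printf '%s\n%s\n' "$abfs_min_version" "$version" | sort -V | head -n 1)" = "$abfs_min_version" ]
}

# Usage with the local Hadoop client ("hadoop version" prints "Hadoop X.Y.Z..." first):
#   abfs_supported "$(hadoop version | head -n 1 | awk '{print $2}')"
```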

Gathering needed binaries

To write data to ADLS Gen2, we will need to use:

- The Hadoop 3.3.0 binaries for the Hadoop CLI and MapReduce2

- The hadoop-azure jar

- The wildfly-openssl jar for secure connections to ADLS

Use these commands to download the binaries and upload the Hadoop distribution to HDFS (requires the jq package):

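```shell
mkdir distcp-to-adls
cd distcp-to-adls

user=$USER
hadoop_version=3.3.0
hadoop_azure_jar="https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-azure/$hadoop_version/hadoop-azure-$hadoop_version.jar"
wildfly_openssl_jar=https://repo1.maven.org/maven2/org/wildfly/openssl/wildfly-openssl/1.0.7.Final/wildfly-openssl-1.0.7.Final.jar

# HDFS folder holding the MapReduce framework archive (and, later, the credentials)
hdfs_dir="/user/$user/.distcp-adls"
hdfs dfs -mkdir -p "$hdfs_dir"
hdfs dfs -rm -r -f "$hdfs_dir/*"

# Ask the Apache mirror system for the preferred mirror (JSON output parsed with jq).
# Old releases that are no longer on the mirrors stay on https://archive.apache.org/dist/
hadoop_path="hadoop/common/hadoop-$hadoop_version/hadoop-$hadoop_version.tar.gz"
hadoop_mirror=$(curl "https://www.apache.org/dyn/closer.cgi?path=$hadoop_path&as_json=1" | jq -r '.preferred')
hadoop_url="$hadoop_mirror$hadoop_path"

# Download the Hadoop distribution and the two extra jars
mkdir lib
wget -P lib "$hadoop_url" && wget -P lib "$hadoop_azure_jar" && wget -P lib "$wildfly_openssl_jar"

# Repackage Hadoop as mapreduce.tar.gz (top-level folder "hadoop") and upload it to HDFS
cd lib && tar -xzf "hadoop-$hadoop_version.tar.gz"
mv "hadoop-$hadoop_version" hadoop
tar -czf mapreduce.tar.gz hadoop && cd ..
hdfs dfs -put lib/mapreduce.tar.gz "$hdfs_dir"

# Local copy of the cluster client configuration, passed to --config later
mkdir hadoop-conf
cp /etc/hadoop/conf/*-site.xml hadoop-conf
```

If everything worked fine, you have: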

- All the binaries in ./lib:

  ```
  $ ls -1 ./lib
  hadoop
  hadoop-3.3.0.tar.gz
  hadoop-azure-3.3.0.jar
  mapreduce.tar.gz
  wildfly-openssl-1.0.7.Final.jar
  ```

- The Hadoop binaries as mapreduce.tar.gz in HDFS at /user/$user/.distcp-adls:

  ```
  $ hdfs dfs -ls /user/leonardg/.distcp-adls
  Found 1 items
  -rw-r--r-- 3 leonardg users 500735363 2020-09-24 19:14 /user/leonardg/.distcp-adls/mapreduce.tar.gz
  ```
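The local part of this check can also be scripted. A minimal sketch: `check_distcp_lib` is a hypothetical helper, the file names assume the Hadoop 3.3.0 and wildfly-openssl 1.0.7.Final downloads used above, and it only checks that the files exist, not that the downloads are complete.

```shell
# Sketch: verify that the lib folder holds everything the DistCp job needs.
# File names assume Hadoop 3.3.0 and wildfly-openssl 1.0.7.Final.
check_distcp_lib() {
  lib_dir="${1:-./lib}"
  missing=0
  for entry in hadoop/bin/hadoop hadoop-azure-3.3.0.jar \
               wildfly-openssl-1.0.7.Final.jar mapreduce.tar.gz; do
    if [ ! -e "$lib_dir/$entry" ]; then
      echo "missing: $lib_dir/$entry" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage, together with a check of the archive uploaded to HDFS:
#   check_distcp_lib ./lib && hdfs dfs -test -e "$hdfs_dir/mapreduce.tar.gz"
```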

Storing credentials in a Hadoop CredentialProvider

In order not to store our service principal credentials in plain text, let’s use a Hadoop CredentialProvider in which we will store the OAuth endpoint, the application (client) ID and the application secret:

```shell
tenant=mycompany.onmicrosoft.com
app_id=MY_APP_ID
# Type the client secret (input is not echoed)
read -s app_secret

# $hdfs_dir is the HDFS folder defined in the previous script
cred_provider="$hdfs_dir/adls2keyfile.jceks"
oauth_endpoint="https://login.microsoftonline.com/$tenant/oauth2/token"

# Start from a fresh keystore
hdfs dfs -rm -f "$cred_provider"

# Note: -value makes the value visible in the process list; omit it to be prompted instead
hadoop credential create fs.azure.account.oauth2.client.endpoint \
  -provider "jceks://hdfs$cred_provider" -value "$oauth_endpoint"
hadoop credential create fs.azure.account.oauth2.client.id \
  -provider "jceks://hdfs$cred_provider" -value "$app_id"
hadoop credential create fs.azure.account.oauth2.client.secret \
  -provider "jceks://hdfs$cred_provider" -value "$app_secret"
```

If everything worked fine, you should see 3 properties in your CredentialProvider:

```
$ hadoop credential list -provider "jceks://hdfs$cred_provider"
Listing aliases for CredentialProvider: jceks://hdfs/user/leonardg/.distcp-adls/adls2keyfile.jceks
fs.azure.account.oauth2.client.id
fs.azure.account.oauth2.client.endpoint
fs.azure.account.oauth2.client.secret
```

Copying files with DistCp

Now that everything is set up, we can copy files from HDFS to ADLS with DistCp:

```shell
user=$USER
hdfs_dir="/user/$user/.distcp-adls"
# Full HDP version of the cluster (the version folder name under /usr/hdp)
hdp_version=HDP_VERSION

hdfs_cp_dir=/path/to/dir/in/hdfs
storage_account=ADLS_STORAGE_ACCOUNT
container=ADLS_CONTAINER
adls_cp_dir=path/to/copy/dir
adls_cp_url="abfss://$container@$storage_account.dfs.core.windows.net/$adls_cp_dir"

# Hadoop 3.3.0 client + cluster configuration, OAuth client credentials read from
# the CredentialProvider, and the Hadoop 3.3.0 MapReduce framework uploaded to HDFS
lib/hadoop/bin/hadoop \
  --config "./hadoop-conf" \
  distcp \
  -Dfs.azure.account.auth.type=OAuth \
  -Dfs.azure.account.oauth.provider.type=org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider \
  -Dhdp.version="$hdp_version" \
  -Dmapreduce.application.framework.path="$hdfs_dir/mapreduce.tar.gz#mr-framework" \
  -Dhadoop.security.credential.provider.path="jceks://hdfs$hdfs_dir/adls2keyfile.jceks" \
  -libjars "./lib/hadoop-azure-3.3.0.jar,./lib/wildfly-openssl-1.0.7.Final.jar" \
  "$hdfs_cp_dir" "$adls_cp_url"
```

Writing data with NiFi

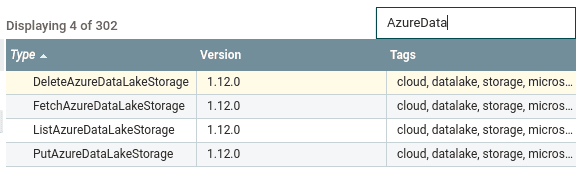

Native ADLS Gen2 processors were introduced in NiFi 1.12.0 (NIFI-7103). While it was possible to interact with ADLS Gen2 using the HDFS processors, this required storing the storage account access key in plain text in core-site.xml, which is obviously not recommended. Here we will focus on the new native processors.

Import needed processors

First, we need to make the new processors available to our NiFi instance (note: you don't have to upgrade your whole NiFi cluster; the following procedure was tested on NiFi 1.9.0):

- Download the NiFi 1.12.0 binaries from the official website

- Extract the archive. The 2 NARs we are interested in are nifi-azure-nar-1.12.0.nar and nifi-azure-services-api-nar-1.12.0.nar. You will find them in the lib folder.

- On all your NiFi nodes:

  - Locate the NiFi home (e.g. /usr/hdf/current/nifi on HDF)

  - Look for existing Azure NARs in the lib folder:

    ```
    $ ls -l /usr/hdf/current/nifi/lib | grep azure
    nifi-azure-nar-1.9.0.3.4.1.1-4.nar
    ```

  - Remove the existing Azure NARs (make a backup)

  - Copy the 2 NiFi 1.12.0 Azure NARs mentioned in step 2 to the lib folder

  - Make sure that they are owned by the nifi user:

    ```shell
    chown nifi:nifi /usr/hdf/current/nifi/lib/nifi-azure-*
    ```

- Restart the NiFi cluster (via Ambari on HDF)

- Check that the new Azure processors are available (e.g. PutAzureDataLakeStorage)
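With many NiFi nodes, the NAR swap can be wrapped in a small function run on each node. This is a sketch under assumptions: `swap_azure_nars` and the backup folder are hypothetical, the NiFi 1.12.0 archive is assumed to be extracted to `./nifi-1.12.0`, and ownership still has to be fixed with the `chown` command above.

```shell
# Sketch of the per-node NAR swap: back up the existing Azure NARs, then copy
# the NiFi 1.12.0 ones. Meant to run once per node: a second run would move
# the 1.12.0 NARs themselves into the backup folder before copying them again.
swap_azure_nars() {
  nifi_lib="$1"     # NiFi lib folder, e.g. /usr/hdf/current/nifi/lib
  new_nars="$2"     # folder holding the NiFi 1.12.0 Azure NARs
  backup_dir="$3"   # where the old Azure NARs are moved
  mkdir -p "$backup_dir" || return 1
  for nar in "$nifi_lib"/nifi-azure-*.nar; do
    [ -e "$nar" ] || continue   # the glob matched nothing
    mv "$nar" "$backup_dir/" || return 1
  done
  cp "$new_nars/nifi-azure-nar-1.12.0.nar" \
     "$new_nars/nifi-azure-services-api-nar-1.12.0.nar" "$nifi_lib/" || return 1
}

# Usage on an HDF node (as root), then restart NiFi:
#   swap_azure_nars /usr/hdf/current/nifi/lib ./nifi-1.12.0/lib /root/nifi-azure-nar-backup
#   chown nifi:nifi /usr/hdf/current/nifi/lib/nifi-azure-*
```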

Generating a Shared Access Signature

NiFi uses an ADLSCredentialsControllerService to store the credentials needed to access ADLS. It accepts either an account key or a SAS token. Although NiFi encrypts both credential types, we will use the SAS method, which is recommended by Microsoft.

Let's generate a user delegation SAS for our ADLS container. A user delegation SAS is signed with Azure Active Directory credentials, instead of the account key used for account and service SAS. Access is therefore checked twice:

- Against the permissions of the identity that signed the SAS

- Against the permissions granted by the SAS itself (see Specify permissions)

The easiest way to generate a SAS is with Azure CLI:

- Install Azure CLI

- Log in to the CLI using the service principal created in the first section of this article (SP_ID is the application ID, and the password is the client secret):

  ```
  $ az login --service-principal -u SP_ID --tenant mycompany.onmicrosoft.com
  Password:
  ```

- Use the az storage container generate-sas command (adjust the permissions to your needs):

  ```shell
  storage_account=ADLS_STORAGE_ACCOUNT
  container=ADLS_CONTAINER
  az storage container generate-sas -n "$container" \
    --account-name "$storage_account" \
    --subscription "MySubscription" \
    --permission dlrw \
    --expiry 2020-09-25T00:00:00Z \
    --as-user --auth-mode login
  ```

- Save the generated SAS

Note: a user delegation SAS is valid for at most 7 days, so you will have to automate its renewal, or use a service SAS, which can have a longer lifetime.
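When automating the renewal, the expiry date can be computed instead of hard-coded. A minimal sketch, assuming GNU `date` (BSD/macOS `date` has no `-d` option); `sas_expiry_in` is a hypothetical helper that refuses anything beyond the 7-day limit and prints the `Y-m-dTH:MZ` UTC format accepted by `--expiry`.

```shell
# Sketch: compute an --expiry value that stays within the 7-day limit of a
# user delegation SAS. Assumes GNU date.
sas_expiry_in() {
  days="${1:-6}"
  case "$days" in
    ''|*[!0-9]*) echo "days must be a positive integer" >&2; return 1 ;;
  esac
  if [ "$days" -lt 1 ] || [ "$days" -gt 7 ]; then
    echo "a user delegation SAS must expire within 1 to 7 days" >&2
    return 1
  fi
  # Minutes are truncated, so the result is never later than now + $days days
  date -u -d "+${days} days" '+%Y-%m-%dT%H:%MZ'
}
```

It can then be passed as `--expiry "$(sas_expiry_in 6)"` to the `az storage container generate-sas` command above; defaulting to 6 days leaves some margin below the limit.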

Configure the dataflow

We can now interact with ADLS Gen2 from a NiFi dataflow:

- Create an ADLSCredentialsControllerService

- Fill in the properties:

  - Storage Account Name

  - SAS Token: add a ? before the SAS generated above, e.g. ?se=2020-11-10T00%3A00%3A00Z&sp=rwdl&sv=2018-11-09&sr=...

- Create whatever processor you need to access ADLS Gen2, using the ADLSCredentialsControllerService created above
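When the SAS is generated by a script, the `?` prefix can be added programmatically. A minimal sketch, assuming the token comes from `az storage container generate-sas` with the default JSON output, which wraps it in double quotes (`--output tsv` avoids them); `nifi_sas_token` is a hypothetical helper.

```shell
# Sketch: turn a raw SAS token into the value expected by the "SAS Token"
# property of ADLSCredentialsControllerService (quotes removed, "?" prefix).
nifi_sas_token() {
  token="$1"
  token="${token#\"}"   # drop a leading double quote, if any
  token="${token%\"}"   # drop a trailing double quote, if any
  case "$token" in
    \?*) printf '%s\n' "$token" ;;    # already starts with "?"
    *)   printf '?%s\n' "$token" ;;
  esac
}

# Usage:
#   sas=$(az storage container generate-sas ... --as-user --auth-mode login)
#   nifi_sas_token "$sas"
```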

Alternative solutions

Azure Data Factory

An alternative solution to copy data from an on-premises Hadoop cluster to Azure is Azure Data Factory, which offers many connectors.

In addition, Data Factory lets you create a self-hosted integration runtime inside your on-premises private network.

The whole setup is well explained in the Azure documentation, so we won't go into more detail here!

Recap

In this article, we have seen that even though it is not natively supported, we can easily use later versions of a few components to interact with ADLS Gen2 from both the HDP and HDF platforms.