Today, virtual-reality experts look back on the system as the first interactive augmented-reality system that enabled users to engage simultaneously with real and virtual objects in a single immersive reality.

The project began in 1991, when I pitched the effort as part of my doctoral research at Stanford University. By the time I finished, three years and multiple prototypes later, the system I had assembled filled half a room and used nearly a million dollars’ worth of hardware. And I had collected enough data from human testing to definitively show that augmenting a real workspace with virtual objects could significantly enhance user performance in precision tasks.

Given the short time frame, it might sound like everything went smoothly, but the project came close to getting derailed many times, thanks to a tight budget and substantial equipment needs. In fact, the effort might have crashed early on had a parachute (a real one, not a virtual one) not failed to open in the clear blue skies over Dayton, Ohio, during the summer of 1992.

Before I explain how a parachute accident helped drive the development of augmented reality, I’ll lay out a little of the historical context.

Thirty years ago, the field of virtual reality was in its infancy, the phrase itself having only been coined in 1987 by Jaron Lanier, who was commercializing some of the first headsets and gloves. His work built on earlier research by Ivan Sutherland, who pioneered head-mounted display technology and head-tracking, two critical elements that sparked the VR field. Augmented reality (AR), which combines the real world and the virtual world into a single immersive and interactive reality, did not yet exist in a meaningful way.

Back then, I was a graduate student at Stanford University and a part-time researcher at NASA’s Ames Research Center, interested in the creation of virtual worlds. At Stanford, I worked in the Center for Design Research, a group focused on the intersection of humans and technology that created some of the very early VR gloves, immersive vision systems, and 3D audio systems. At NASA, I worked in the Advanced Displays and Spatial Perception Laboratory of the Ames Research Center, where researchers were exploring the fundamental parameters required to enable realistic and immersive simulated worlds.

Of course, knowing how to create a quality VR experience and being able to produce it are not the same thing. The best PCs on the market back then used Intel 486 processors running at 33 megahertz. Adjusted for inflation, they cost about US $8,000 and weren’t even a thousandth as fast as a cheap gaming computer today. The other option was to invest $60,000 in a Silicon Graphics workstation, which was still less than a hundredth as fast as a mediocre PC today. So, though researchers working in VR during the late 80s and early 90s were doing groundbreaking work, the resulting virtual experiences were plagued by crude graphics, bulky headsets, and lag so bad it made people dizzy or nauseous.

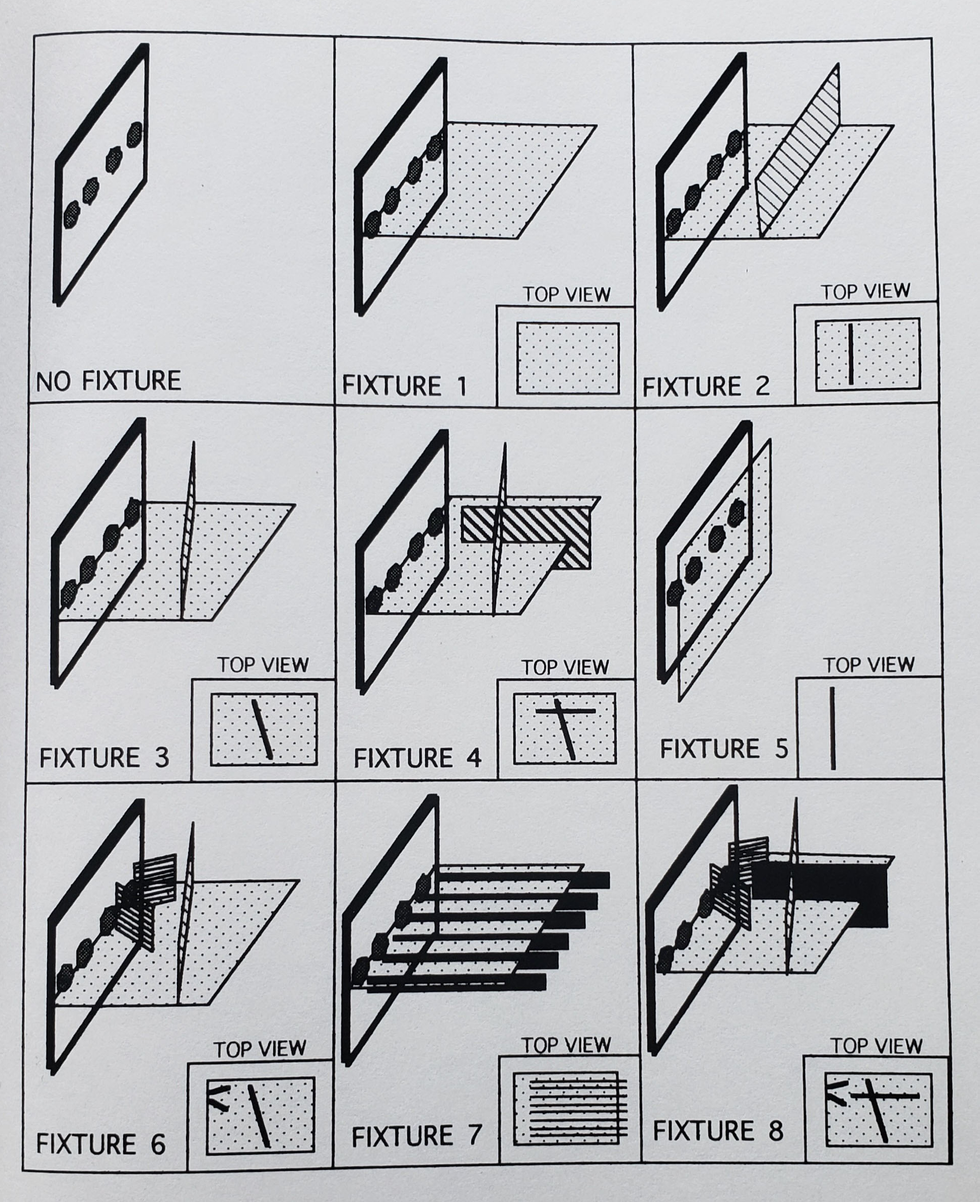

These early drawings of a real pegboard combined with virtual overlays generated by a computer, an early version of augmented reality, were created by Louis Rosenberg as part of his Virtual Fixtures project. Credit: Louis Rosenberg

I was conducting a research project at NASA to improve depth perception in early 3D-vision systems, and I was one of those people getting dizzy from the lag. And I found that the images created back then were definitely virtual but far from reality.

Still, I wasn’t discouraged by the dizziness or the low fidelity, because I was sure the hardware would steadily improve. Instead, I was concerned about how enclosed and isolated the VR experience made me feel. I wished I could expand the technology, taking the power of VR and unleashing it into the real world. I dreamed of creating a merged reality where virtual objects inhabited your physical surroundings so authentically that they seemed like genuine parts of the world around you, enabling you to reach out and interact with them as if they were actually there.

I was aware of one very basic sort of merged reality, the head-up display, which military pilots used to see flight data in their line of sight so they didn’t have to look down at cockpit gauges. I hadn’t experienced such a display myself, but I became familiar with them thanks to a few 1980s blockbusters, including Top Gun and Terminator. In Top Gun, a glowing crosshair appeared on a glass panel in front of the pilot during dogfights; in Terminator, crosshairs joined text and numerical data as part of the fictional cyborg’s view of the world around it.

Neither of these merged realities was the slightest bit immersive: both presented images on a flat plane rather than anchoring them to the real world in 3D space. But they hinted at interesting possibilities. I thought I could move far beyond simple crosshairs and text on a flat plane to create virtual objects that could be spatially registered to real objects in an ordinary environment. And I hoped to give those virtual objects realistic physical properties.

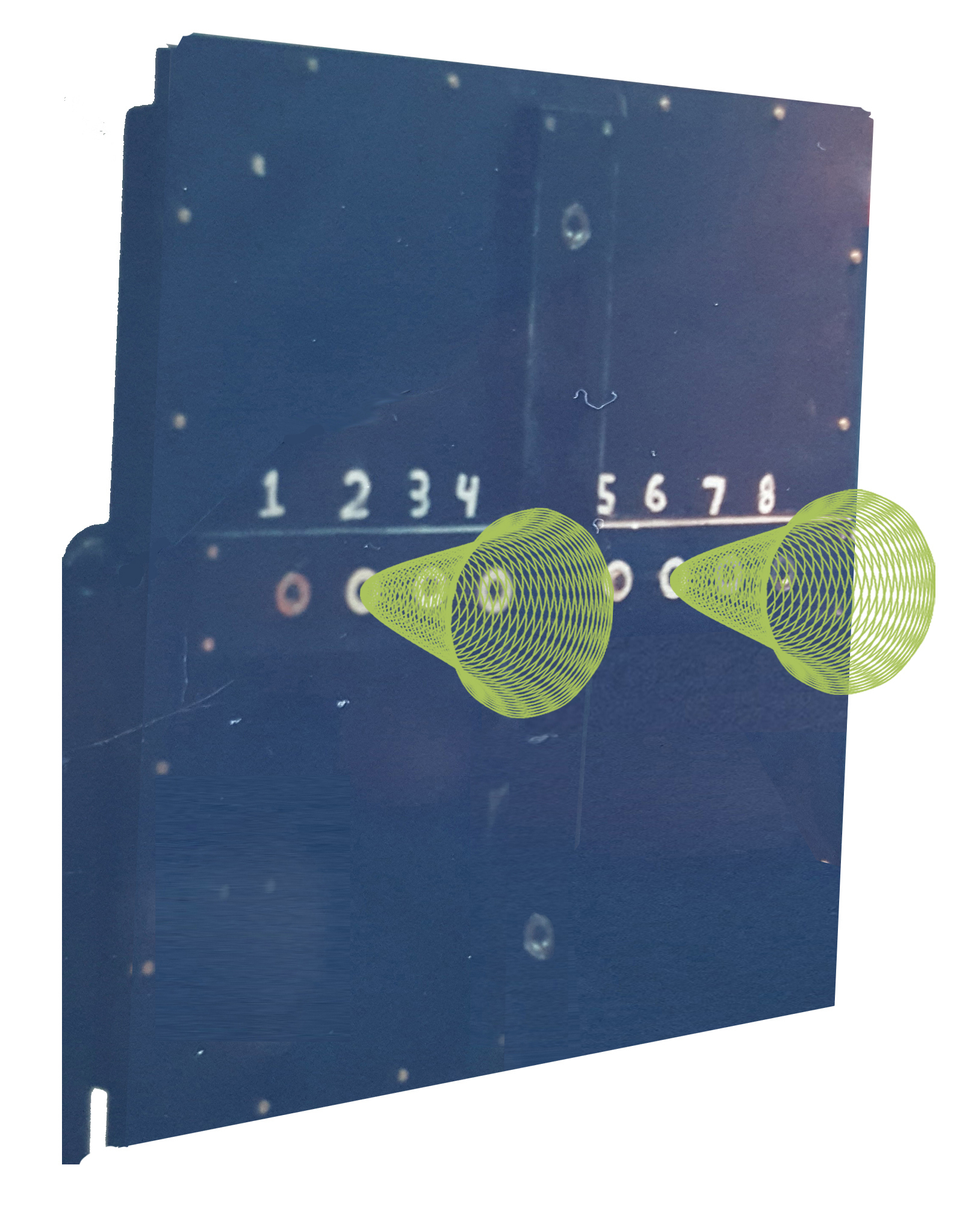

The Fitts’s Law peg-insertion task involves having test subjects quickly move metal pegs between holes. The board shown here was real; the cones that helped guide the user to the correct holes were virtual. Credit: Louis Rosenberg

I needed substantial resources, beyond what I had access to at Stanford and NASA, to pursue this vision. So I pitched the concept to the Human Sensory Feedback Group of the U.S. Air Force’s Armstrong Laboratory, now part of the Air Force Research Laboratory.

To explain the practical value of merging real and virtual worlds, I used the analogy of a simple metal ruler. If you want to draw a straight line in the real world, you can do it freehand, going slowly and using significant mental effort, and it still won’t be particularly straight. Or you can grab a ruler and do it much faster with far less mental effort. Now imagine that instead of a real ruler, you could grab a virtual ruler and make it instantly appear in the real world, perfectly registered to your real surroundings. And imagine that this virtual ruler feels physically authentic, so much so that you can use it to guide your real pencil. Because it’s virtual, it can be any shape and size, with interesting and helpful properties that you could never achieve with a metal straightedge.

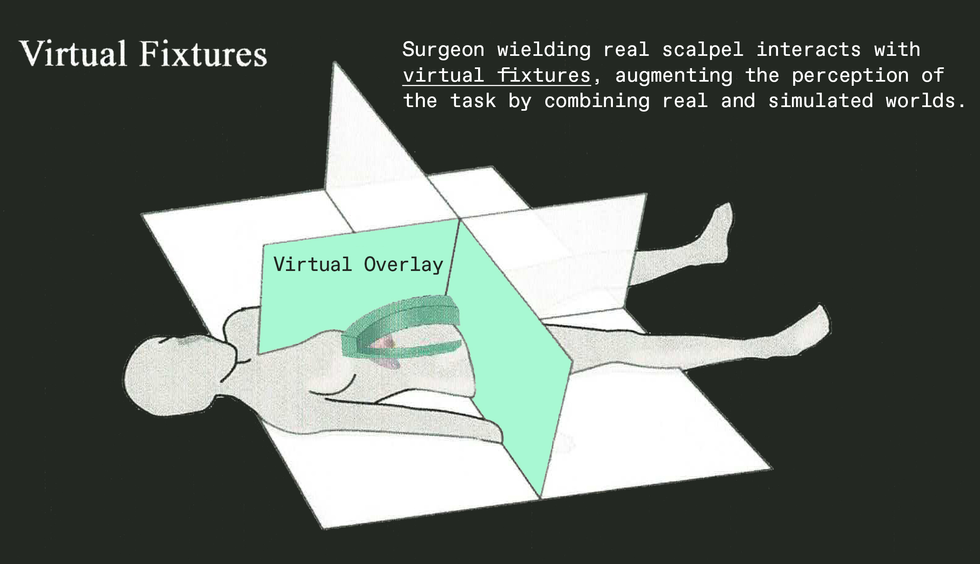

Of course, the ruler was just an analogy. The applications I pitched to the Air Force ranged from augmented manufacturing to surgery. For example, consider a surgeon who needs to make a dangerous incision. She could use a bulky metal fixture to steady her hand and avoid vital organs. Or we could invent something new to augment the surgery: a virtual fixture to guide her real scalpel, not just visually but physically. Because it’s virtual, such a fixture could pass right through the patient’s body, sinking into tissue before a single cut had been made. That was the concept that got the military excited. Their interest covered not just in-person tasks like surgery but also remote tasks performed with remotely controlled robots. For example, a technician on Earth could repair a satellite by controlling a robot remotely, assisted by virtual fixtures added to video images of the real worksite. The Air Force agreed to provide enough funding to cover my expenses at Stanford along with a small budget for equipment. Perhaps more significantly, I also got access to computers and other equipment at Wright-Patterson Air Force Base near Dayton, Ohio.

And so what became known as the Virtual Fixtures Project came to life, working toward building a prototype that could be rigorously tested with human subjects. I became a roving researcher, developing core ideas at Stanford, fleshing out some of the underlying technologies at NASA Ames, and assembling the full system at Wright-Patterson.

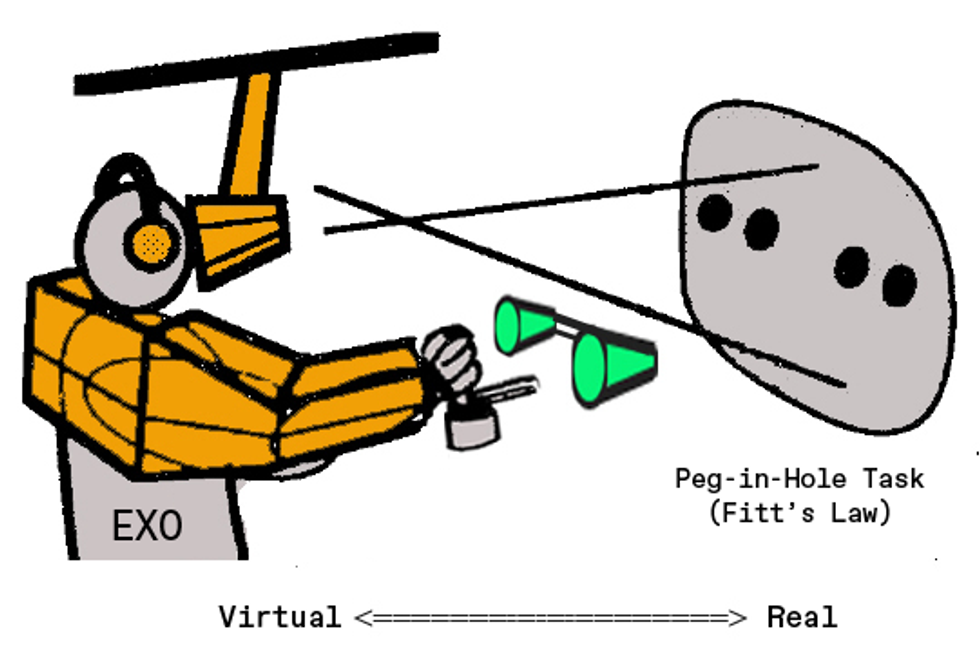

In this sketch of his augmented-reality system, Louis Rosenberg shows a user of the Virtual Fixtures platform wearing a partial exoskeleton and peering at a real pegboard augmented with cone-shaped virtual fixtures. Credit: Louis Rosenberg

Now, about those parachutes.

As a young researcher in my early twenties, I was eager to learn about the many projects going on around me at these various laboratories. One effort I followed closely at Wright-Patterson was a project designing new parachutes. As you might expect, when the research team came up with a new design, they didn’t just strap a person in and test it. Instead, they attached the parachutes to dummy rigs fitted with sensors and instrumentation. Two engineers would go up in an airplane with the hardware, dropping the rigs and jumping alongside so they could observe how the chutes unfolded. Stick with my story and you’ll see how this became key to the development of that early AR system.

Back on the Virtual Fixtures effort, I aimed to prove the basic concept: that a real workspace could be augmented with virtual objects that feel so real they could assist users as they performed dexterous manual tasks. To test the idea, I wasn’t going to have users perform surgery or repair satellites. Instead, I needed a simple, repeatable task to quantify manual performance. The Air Force already had a standardized task it had used for years to test human dexterity under a variety of mental and physical stresses. It’s called the Fitts’s Law peg-insertion task, and it involves having test subjects quickly move metal pegs between holes on a large pegboard.
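
For readers unfamiliar with the law behind the task: Fitts’s law predicts that the time to hit a target grows with the logarithm of the ratio of movement distance to target width. The minimal Python sketch below uses Fitts’s original formulation, ID = log2(2D/W), with movement time MT = a + b·ID. The Shannon variant, log2(D/W + 1), is also widely used. The distances, widths, and coefficients a and b are hypothetical illustration values, not figures from the Virtual Fixtures trials.

```python
import math

def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts's original index of difficulty, in bits: ID = log2(2D / W)."""
    if distance <= 0 or width <= 0:
        raise ValueError("distance and width must be positive")
    return math.log2(2 * distance / width)

def predicted_movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Fitts's law, MT = a + b * ID.

    The intercept a (seconds) and slope b (seconds per bit) are not universal
    constants; they are fitted by regression to each subject's measured trials.
    """
    return a + b * index_of_difficulty(distance, width)

# Hypothetical layout: holes 320 mm apart with a 20 mm target width.
print(index_of_difficulty(320, 20))                    # 5.0 bits
print(predicted_movement_time(320, 20, a=0.1, b=0.1))  # about 0.6 s with these made-up coefficients
```

Doubling the travel distance or halving the target width adds one bit of difficulty. In both cases the model predicts a fixed extra increment of time, b.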

So I began assembling a system that would enable virtual fixtures to be merged with a real pegboard, creating a mixed-reality experience precisely registered in 3D space. I aimed to make these virtual objects feel so real that bumping the real peg into a virtual fixture would feel as authentic as bumping it into the actual board.

I wrote software to simulate a wide range of virtual fixtures, from simple surfaces that prevented your hand from overshooting a target hole to carefully shaped cones that could help a user guide the real peg into the real hole. I created virtual overlays that simulated textures and had corresponding sounds, and even overlays that simulated pushing through a thick liquid, as if it were virtual honey.
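
The cone and honey fixtures described above can be approximated with very simple force laws. The Python sketch below is a hypothetical illustration, not the original Virtual Fixtures software. The cone geometry (a vertical axis through the hole), the linear spring stiffness, the damping constant, and the function names are all assumptions chosen to show the idea.

```python
import math

def cone_fixture_force(x, y, z, hole_radius, half_angle_deg, stiffness):
    """Lateral (fx, fy) push from a hypothetical cone-shaped virtual fixture.

    The cone's axis is vertical and passes through the hole centre, which sits
    at the origin; z is the peg tip's height above the board. At height z the
    allowed radius is hole_radius + z * tan(half_angle). Inside that radius the
    fixture exerts no force. Outside it, a spring proportional to the overshoot
    pushes the tip back toward the axis, funnelling the peg into the hole.
    """
    allowed = hole_radius + max(z, 0.0) * math.tan(math.radians(half_angle_deg))
    r = math.hypot(x, y)
    if r <= allowed:
        return (0.0, 0.0)
    push = stiffness * (r - allowed)
    # Unit vector from the axis to the tip is (x / r, y / r); push the opposite way.
    return (-push * x / r, -push * y / r)

def honey_force(velocity, damping):
    """'Virtual honey': viscous drag opposing motion, F = -b * v on each axis."""
    return tuple(-damping * v for v in velocity)

# Units are arbitrary but must be consistent (for example mm and N/mm).
print(cone_fixture_force(10.0, 0.0, 0.0, hole_radius=5.0, half_angle_deg=30.0, stiffness=0.2))
print(honey_force((1.0, -2.0, 0.5), damping=3.0))
```

The cone widens with height. A peg held high above the board can therefore wander freely, while one close to the surface is squeezed toward the hole.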

One imagined use for augmented reality at the time of its creation was in surgery. Today, augmented reality is used for surgical training, and surgeons are beginning to use it in the operating room. Credit: Louis Rosenberg

For more realism, I modeled the physics of each virtual element, registering its location accurately in three dimensions so it lined up with the user’s perception of the real wooden board. Then, when the user moved a hand into an area corresponding to a virtual surface, motors in the exoskeleton would physically push back, using an interface technology now commonly called "haptics." It felt so authentic that you could slide along the edge of a virtual surface the way you might move a pencil against a real ruler.
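
A common textbook way to make a virtual surface "push back" is a penalty-based wall. When the hand penetrates the surface, the actuators apply a spring force proportional to the penetration depth, directed along the surface normal. The minimal Python sketch below assumes a flat plane and a hypothetical stiffness. It is an illustration of the general technique, not the exoskeleton controller described here.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

@dataclass
class VirtualWall:
    """Hypothetical penalty-based haptic wall (an infinite plane in 3D)."""
    point: Vec3        # any point on the plane
    normal: Vec3       # unit normal pointing out of the wall, into free space
    stiffness: float   # spring constant, e.g. N/m (assumed value)

    def force(self, hand: Vec3) -> Vec3:
        # Signed distance of the hand from the plane; negative means the hand
        # has pushed "into" the virtual surface.
        signed = sum((h - q) * n for h, q, n in zip(hand, self.point, self.normal))
        if signed >= 0.0:
            return (0.0, 0.0, 0.0)  # free space: actuators stay idle
        depth = -signed
        # Push straight back out along the normal: F = k * depth * n.
        return tuple(self.stiffness * depth * n for n in self.normal)

wall = VirtualWall(point=(0.0, 0.0, 0.0), normal=(0.0, 0.0, 1.0), stiffness=500.0)
print(wall.force((0.30, -0.20, -0.01)))  # hand pressed 1 cm into the wall
print(wall.force((0.30, -0.20, 0.05)))   # hand hovering above it
```

The force acts only along the normal, so motion parallel to the wall meets no resistance. That is why a user can slide along a virtual edge as if it were a ruler. Practical haptic loops commonly recompute this force at around 1 kHz so that a stiff wall feels solid rather than spongy.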

To accurately align these virtual elements with the real pegboard, I needed high-quality video cameras. Video cameras at the time were far more expensive than they are today, and I had no money left in my budget to buy them. This was a frustrating barrier: The Air Force had given me access to a wide range of amazing hardware, but when it came to simple cameras, they couldn’t help. It seemed like every research project needed them, most of far higher priority than mine.

Which brings me back to the skydiving engineers testing experimental parachutes. These engineers came into the lab one day to chat, and they mentioned that their chute had failed to open. Their dummy rig had plummeted to the ground, destroying all the sensors and cameras aboard.

This seemed like it would be a setback for my project as well, because I knew that if there were any extra cameras in the building, the engineers would get them.

But then I asked if I could take a look at the wreckage from their failed test. It was a mangled mess of bent metal, dangling circuits, and smashed cameras. Still, though the cameras looked awful, with cracked cases and damaged lenses, I wondered if I could get any of them to work well enough for my needs.

By some miracle, I was able to piece together two working units from the six that had plummeted to the ground. And so, the first human testing of an interactive augmented-reality system was made possible by cameras that had literally fallen out of the sky and smashed into the earth.

To appreciate how important these cameras were to the system, think of a simple AR application today, like Pokémon Go. If you didn’t have a camera on the back of your phone to capture and display the real world in real time, it wouldn’t be an augmented-reality experience; it would just be a standard video game.

The same was true for the Virtual Fixtures system. But thanks to the cameras from that failed parachute rig, I was able to create a mixed reality with accurate spatial registration. This provided an immersive experience in which you could reach out and interact with the real and virtual environments simultaneously.

As for the experimental part of the project, I conducted a series of human studies in which users experienced a variety of virtual fixtures overlaid onto their perception of the real task board. The most useful fixtures turned out to be cones and surfaces that could guide the user’s hand as they aimed the peg toward a hole. The most effective involved physical experiences that couldn’t easily be produced in the real world but were readily achievable virtually. For example, I coded virtual surfaces that were "magnetically attractive" to the peg. For the users, it felt as if the peg had snapped to the surface. Then they could glide along it until they chose to yank free with another snap. These fixtures increased speed and dexterity in the trials by more than 100 percent.
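
The magnetic surfaces described above can be modeled as a force that depends only on the peg’s distance from the surface. The minimal Python sketch below is an illustration under stated assumptions, not the original code. The linear spring inside a fixed capture range, the function names, and the parameter values are all hypothetical.

```python
def magnetic_surface_force(gap: float, capture_range: float, stiffness: float) -> float:
    """Normal force from a hypothetical 'magnetically attractive' virtual surface.

    gap: distance of the peg from the surface (positive = away from it).
    Returns a signed force along the surface normal; negative pulls the peg in.

    Inside the capture range a spring draws the peg onto the surface, so it
    snaps on. To leave, the user must pull against a force that grows to
    stiffness * capture_range; beyond that point the force drops to zero at
    once, which the hand feels as a second snap on release.
    """
    if 0.0 < gap <= capture_range:
        return -stiffness * gap
    return 0.0

def breakaway_force(capture_range: float, stiffness: float) -> float:
    """Largest pull the user must exceed to yank the peg free."""
    return stiffness * capture_range

# Units assumed: mm and N/mm.
for gap in (6.0, 5.0, 2.0, 0.0):
    print(gap, magnetic_surface_force(gap, capture_range=5.0, stiffness=0.5))
print(breakaway_force(capture_range=5.0, stiffness=0.5))
```

Because the force acts only along the normal, the peg can still glide freely across the surface while it is held.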

Of the various applications for Virtual Fixtures that we considered at the time, the most commercially viable back then involved manually controlling robots in remote or dangerous environments, for example during hazardous-waste cleanup. If the communications distance introduced a time delay in the telerobotic control, virtual fixtures became even more valuable for enhancing human dexterity.

Today, researchers are still exploring the use of virtual fixtures for telerobotic applications with great success, including satellite repair and robot-assisted surgery.

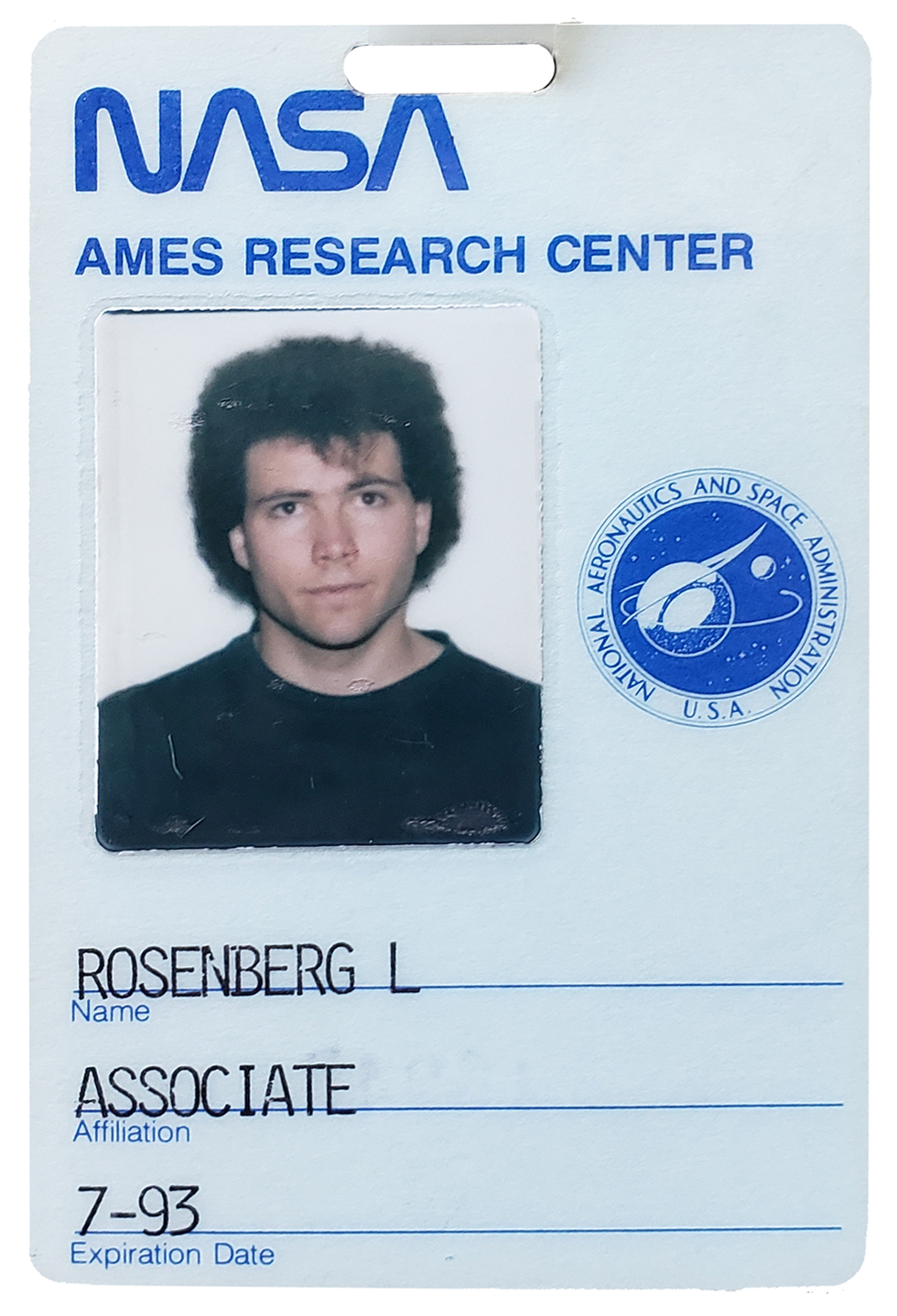

Louis Rosenberg spent some of his time working in the Advanced Displays and Spatial Perception Laboratory of the Ames Research Center as part of his research in augmented reality. Credit: Louis Rosenberg

I went in a different direction, pushing for more mainstream applications of augmented reality. That’s because the part of the Virtual Fixtures project that had the greatest impact on me personally wasn’t the improved performance in the peg-insertion task. Instead, it was the big smiles that lit up the faces of the human subjects when they climbed out of the system and effused about what a remarkable experience they had had. Many told me, without prompting, that this type of technology would one day be everywhere.

And indeed, I agreed with them. I was convinced we’d see this type of immersive technology go mainstream by the end of the 1990s. In fact, I was so inspired by the enthusiastic reactions people had when they tried those early prototypes that in 1993 I founded a company, Immersion, with the goal of pursuing mainstream consumer applications. Of course, it hasn’t happened nearly that fast.

At the risk of being wrong again, I sincerely believe that virtual and augmented reality, now commonly referred to as the metaverse, will become an important part of most people’s lives by the end of the 2020s. In fact, based on the recent surge of investment by major corporations into improving the technology, I predict that by the early 2030s augmented reality will replace the mobile phone as our primary interface to digital content.

And no, none of the test subjects who experienced that early glimpse of augmented reality 30 years ago knew they were using hardware that had fallen out of an airplane. But they did know that they were among the first to reach out and touch our augmented future.
